AI Becomes Infrastructure: From Coding Agents to Edge, Quantum, Biotech

Published Jan 4, 2026

If you still think AI is just autocomplete, look again: in the two weeks from 2024-12-22 to 2025-01-04, major vendors moved AI into IDEs, repos, devices, labs, and security frameworks. Here is what changed and what to do about it. JetBrains (release notes 2024-12-23) added multi-file navigation, test generation, and refactoring inside IntelliJ; GitHub rolled out Copilot Workspace and IDE integrations; Google and Microsoft refreshed enterprise integration patterns. Qualcomm and Nvidia updated on-device stacks (around 2024-12-22 to 12-23); Meta and community forks pushed sub-3B LLaMA variants for edge use. Quantinuum reported 8 logical qubits (late 2024). DeepMind/Isomorphic and open-source projects packaged AlphaFold 3 into lab pipelines. CISA and OSS communities extended SBOM and supply-chain guidance to models. Bottom line: AI is now infrastructure. To get production value, prioritize repo/CI/policy integration, model provenance, and end-to-end workflows.

Agentic AI Is Taking Over Engineering: From Code to Incidents and Databases

Published Jan 4, 2026

If messy backfills, one-off prod fixes, and overflowing tickets keep you up, here’s what changed in the last two weeks and what to do next. Vendors and OSS projects shipped agentic, multi-agent coding features in late December (Anthropic 2025-12-23; Cursor, Windsurf; AutoGen 0.4 on 2025-12-22; LangGraph 0.2 on 2025-12-21) so LLMs can plan, implement, test, and iterate across repos. On-device moves accelerated (Apple Private Cloud Compute update 2025-12-26; Qualcomm/MediaTek benchmarks in mid-December), making private, low-latency assistants practical. Data and migration tooling added LLM helpers (Snowflake Dynamic Tables 2025-12-23; Databricks Delta Live Tables 2025-12-21), but expect humans to own the PDCVR loop (Plan, Do, Check, Verify, Retrospect). Database change management and just-in-time audited access also got product updates in December (PlanetScale/Neon, Liquibase, Flyway, Teleport, StrongDM). Action: adopt agentic workflows cautiously, run AI drafts through your PDCVR and PR/audit gates, and prioritize on-device options for sensitive code.

From PDCVR to Agent Stacks: Inside the AI-Native Engineering Operating Model

Published Jan 3, 2026

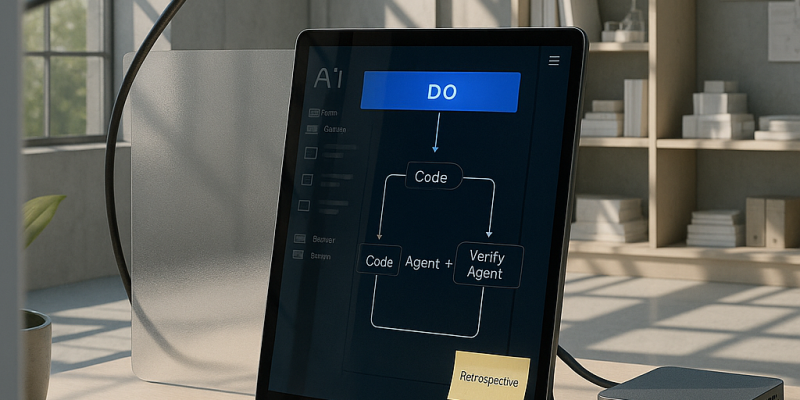

Losing engineer hours to scope creep and brittle AI hacks? On Jan 2–3, 2026, practitioners published concrete patterns showing that AI is being industrialized into an operating model you can copy. The patterns: a PDCVR loop (Plan–Do–Check–Verify–Retrospect) around LLM coding, with repo-governed, model-agnostic checks and Claude Code sub-agents for build and test; a three-tier agent stack with folder-level manifests and a prompt-rewriting meta-agent that cut typical 1–2 day tickets from ≈8 hours to ≈2–3 hours; DevScribe-style offline workspaces that co-host code, schemas, queries, diagrams, and API tests; standardized, idempotent backfill patterns for auditable migrations; and “coordination-aware” agents that measure the alignment tax. If you want short-term productivity and auditable risk controls, start piloting PDCVR, repo policies, an executable workspace, and migration primitives now.
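To make the folder-level manifest and prompt-rewriting idea concrete, here is a minimal sketch in Python. It is not the practitioners' implementation: the manifest contents, the folder names, and the functions `rules_for` and `expand_prompt` are all hypothetical, and a real meta-agent would presumably hand the expanded text to an LLM rather than just build a string.

```python
from pathlib import PurePosixPath

# Hypothetical manifests: folder path -> rules an agent must follow there.
MANIFESTS = {
    ".": ["Run the test suite before proposing a change."],
    "services/payments": ["Never query the ledger DB directly; use LedgerClient."],
    "services/payments/api": ["Keep handlers thin; business logic lives in domain/."],
}


def rules_for(file_path: str, manifests: dict[str, list[str]]) -> list[str]:
    """Collect rules from the repo root down to the file's own folder."""
    folder = PurePosixPath(file_path).parent
    # `parents` runs from nearest folder to root; reverse so general rules come first.
    chain = [folder, *folder.parents][::-1]
    rules: list[str] = []
    for path in chain:
        rules.extend(manifests.get(str(path), []))
    return rules


def expand_prompt(short_prompt: str, file_path: str,
                  manifests: dict[str, list[str]]) -> str:
    """Rewrite a terse prompt into a file-specific instruction (assumed format)."""
    lines = [f"Task: {short_prompt}", f"Target file: {file_path}", "Constraints:"]
    lines += [f"- {rule}" for rule in rules_for(file_path, manifests)]
    return "\n".join(lines)


if __name__ == "__main__":
    print(expand_prompt("add refund endpoint",
                        "services/payments/api/refund.py", MANIFESTS))
```

Ordering rules from root to leaf means the most specific folder's constraints appear last, which is one plausible convention; the source does not say how conflicts between levels are resolved.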

AI Becomes an Operating Layer: PDCVR, Agents, and Executable Workspaces

Published Jan 3, 2026

You’re losing hours to coordination and rework. Over the last 14 days, practitioners (posts dated 2026-01-02/03) showed how AI is shifting from a tool to an operating layer that cuts typical 1–2 day tickets from ~8 hours to ~2–3 hours. The concrete patterns to act on: a published Plan–Do–Check–Verify–Retrospect (PDCVR) workflow (GitHub, 2026-01-03) that embeds tests, multi-agent verification, and retrospectives into the SDLC; folder-level manifests plus a prompt-rewriting meta-agent that preserve architecture and speed up execution; DevScribe-style executable workspaces for local DB/API runs and diagrams; structured AI-assisted data backfills; and “alignment tax” monitoring agents that surface coordination risk. For your org, the next steps are clear: pick an operating model, pilot PDCVR and folder policies in a high-risk stack (fintech or digital health), and instrument alignment metrics.

AI‐Native Operating Models: PDCVR, Agent Stacks, and Executable Workspaces

Published Jan 3, 2026

Burning hours on fragile code, migrations, and alignment? In the last two weeks (posts dated 2026-01-02/03), practitioners sketched public blueprints showing how LLMs and agents are being embedded into real engineering work; here are the patterns to adopt. Engineers describe a Plan–Do–Check–Verify–Retrospect (PDCVR) loop (run with Claude Code and GLM-4.7) that wraps codegen in governance and TDD; multi-level agent stacks plus folder-level manifests that make repos act as soft policy engines; and a meta-agent flow that cut typical 1–2 day tasks from ~8 hours to ~2–3 hours (a 20-minute prompt, 2–3 short loops, and ~1 hour of testing). DevScribe-style executable workspaces, governed data-backfill workflows, and coordination-aware agents complete the model. Why it matters: faster delivery, clearer risk controls, and a measurable “alignment tax” for regulated fintech, trading, and health teams. Immediate takeaway: start piloting PDCVR, folder policies, executable workspaces, and coordination agents.

AI‐Native Operating Models: How Agents Are Rewriting Engineering Workflows

Published Jan 3, 2026

Struggling with slow, risky engineering work? In the past 14 days (posts dated Jan 2–3, 2026) practitioners published concrete frameworks showing AI moving from toy to governed teammate—what you get here are practical primitives you can act on now. They surfaced PDCVR (Plan–Do–Check–Verify–Retrospect) as a daily, test‐driven loop for AI code, folder‐level manifests plus a prompt‐rewriting meta‐agent to keep agents aligned with architecture, and measurable wins (typical 1–2 day tasks fell from ~8 hours to ~2–3 hours). They compared executable workspaces (DevScribe) that bundle DB connectors, diagrams, and offline execution, outlined AI‐assisted, idempotent backfill patterns crucial for fintech/trading/health, and named “alignment tax” as a coordination problem agents can monitor. Bottom line: this isn’t just model choice anymore—it’s an operating‐model design problem; expect teams to adopt PDCVR, folder policies, and coordination agents next.

AI Rewrites Engineering: From Autocomplete to Operating System

Published Jan 3, 2026

Engineers are reporting a productivity and governance breakthrough: in the last 14 days (posts dated 2026‐01‐02/03) practitioners described a repeatable blueprint—PDCVR (Plan–Do–Check–Verify–Retrospect), folder‐level policies, meta‐agents, and execution workspaces like DevScribe—that moves LLMs and agents from “autocomplete” to an engineering operating model. You get concrete wins: open‐sourced PDCVR prompts and Claude Code agents on GitHub (2026‐01‐03), Plan+TDD discipline, folder manifests that prevent architectural drift, and a meta‐agent that cuts a typical 1–2 day ticket from ≈8 hours to ~2–3 hours. Teams also framed data backfills as governed workflows and named “alignment tax” as a coordination problem agents can monitor. If you care about velocity, risk, or compliance in fintech/trading/digital‐health, the immediate takeaway is clear: treat AI as an architectural question—adopt PDCVR, folder priors, executable docs, governed backfills, and alignment‐watching agents.

How AI Became the Governed Worker Powering Modern Engineering Workflows

Published Jan 3, 2026

Teams are turning AI from an oracle into a governed worker by formalizing workflows and agent stacks, cutting tickets that typically take 1–2 days (~8 hours of work) to about 2–3 hours. On Jan 2–3, 2026, practitioners documented a Plan–Do–Check–Verify–Retrospect (PDCVR) loop that makes LLMs produce stepwise plans, RED→GREEN tests, and self-audits, and that uses clustered Claude Code sub-agents to run builds and verification. Folder-level manifests plus a meta-agent rewrite short prompts into file-specific instructions, reducing architecture-breaking edits and speeding throughput (≈20 minutes to craft the prompt, 2–3 short feedback loops, ~1 hour of manual testing). DevScribe-style workspaces let agents execute queries, run tests, and view schemas offline. The same patterns apply to data backfills and to lowering the measurable “alignment tax” by surfacing dependencies and missing reviewers. Bottom line: your advantage will come from designing the system that bounds and measures AI, not just picking a model.
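The PDCVR loop described above can be sketched as a small control loop. This is an illustrative skeleton under stated assumptions, not the published workflow: the function `run_pdcvr`, its callback signatures, and the mapping of "Check" to a self-audit and "Verify" to an independent build/test pass are assumptions made for the example.

```python
from typing import Callable

Issues = list[str]


def run_pdcvr(
    task: str,
    plan: Callable[[str, list[str]], list[str]],  # Plan: stepwise plan, informed by notes
    do: Callable[[list[str]], str],               # Do: implement the plan, return a change
    check: Callable[[str], Issues],               # Check: self-audit of the change
    verify: Callable[[str], Issues],              # Verify: independent build/test pass
    max_loops: int = 3,
) -> tuple[str, list[str]]:
    """Run Plan-Do-Check-Verify until clean, keeping Retrospect notes."""
    notes: list[str] = []  # Retrospect: what each loop found, fed into the next plan
    for loop in range(1, max_loops + 1):
        steps = plan(task, notes)
        change = do(steps)
        issues = check(change)
        if not issues:  # only spend the verification pass on changes that pass self-audit
            issues = verify(change)
        notes.append(f"loop {loop}: {len(issues)} issue(s)")
        if not issues:
            return change, notes
        notes.extend(issues)
    raise RuntimeError(f"no clean change after {max_loops} loops: {notes}")
```

Passing the accumulated notes back into `plan` is what distinguishes this from a plain retry loop: each iteration plans with the previous failures in view. Capping the loop count keeps a human in the position of deciding what happens when the agent cannot converge.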

From Copilot to Co‐Worker: Building an Agentic AI Operating Model

Published Jan 3, 2026

Are you watching engineering time leak into scope creep and late integrations? New practitioner posts (Reddit, Jan 2–3, 2026) show agentic AI moving from demos to an operating model you can deploy: Plan–Do–Check–Verify–Retrospect (PDCVR) loops run with Claude Code and GLM-4.7, backed by open-source prompt and sub-agent templates (GitHub, Jan 3, 2026). Folder-level priors plus a prompt-rewriting meta-agent cut typical 1–2 day fixes from ~8 hours to ~2–3 hours. DevScribe-style executable workspaces, data-backfill platforms, and agents that audit coordination and alignment tax complete the stack for regulated domains like fintech and digital health. The takeaway: the question is no longer whether to use AI, but how to architect PDCVR, meta-agents, folder policies, and verification workspaces into your operating model.

From Demos to Discipline: Agentic AI's New Operating Model

Published Jan 3, 2026

Tired of AI mega-PRs and hours lost to coordination? Engineers are turning agentic AI from demos into a repeatable operating model, and you're likely to see faster, auditable workflows as a result. In practitioner threads from the past two weeks (Reddit, posts dated 2026-01-02/03), teams described PDCVR (Plan–Do–Check–Verify–Retrospect) run with Claude Code and GLM-4.7, folder-level manifests plus a meta-agent that expands terse prompts, and executable workspaces like DevScribe. The payoff: common 1–2 day tickets fell from ~8 hours to ~2–3 hours. Parallel proposals include migration platforms (idempotent jobs, central state, chunking) for safe backfills and coordination agents to track the documented “alignment tax.” Put together, structured loops, multi-level agents, execution-centric docs, disciplined migrations, and alignment monitoring form the emergent AI operating model for high-risk domains (fintech, digital health, engineering).
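The three migration properties named above (idempotent jobs, central state, chunking) can be illustrated with a small sketch using Python's built-in `sqlite3`. The `users` table, the `email_lower` column, the `backfill_state` checkpoint table, and the job name are all hypothetical; the threads describe the pattern, not this schema.

```python
import sqlite3


def backfill_email_lower(conn: sqlite3.Connection,
                         job: str = "email_lower_v1",
                         chunk_size: int = 2) -> int:
    """Fill users.email_lower in id-ordered chunks; safe to re-run or resume."""
    # Central state: one checkpoint row per job records the last processed id.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS backfill_state "
        "(job TEXT PRIMARY KEY, last_id INTEGER NOT NULL)"
    )
    processed = 0
    while True:
        row = conn.execute(
            "SELECT last_id FROM backfill_state WHERE job = ?", (job,)
        ).fetchone()
        last_id = row[0] if row else 0
        # Chunking: take the next slice of ids after the checkpoint.
        ids = [r[0] for r in conn.execute(
            "SELECT id FROM users WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        )]
        if not ids:
            return processed
        with conn:  # the chunk and its checkpoint commit (or roll back) together
            # Idempotent: lower(email) yields the same value however often it runs.
            conn.execute(
                "UPDATE users SET email_lower = lower(email) "
                "WHERE id > ? AND id <= ?",
                (last_id, ids[-1]),
            )
            conn.execute(
                "INSERT OR REPLACE INTO backfill_state (job, last_id) VALUES (?, ?)",
                (job, ids[-1]),
            )
        processed += len(ids)
```

Because each chunk and its checkpoint share one transaction, a crash between chunks leaves the job resumable from the last committed id, and a second full run is a no-op. The checkpoint table also doubles as a simple audit record of how far each job has progressed.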