From Models to Middleware: AI Embeds Into Enterprise Workflows

Published Jan 4, 2026

Drowning in pilot projects and vendor demos? From late 2024 through January 2025, major vendors moved from single “copilots” to production-ready, orchestrated AI in enterprise stacks. The highlights: Microsoft and Google updated agent docs and samples to favor multi-step workflows, function/tool calling, and enterprise guardrails; Qualcomm and Arm shipped concrete silicon, SDKs, and drivers (Snapdragon X Elite targets NPUs above 40 TOPS INT8) to run models on-device; DeepMind’s AlphaFold 3 and open protein models were integrated into drug‐discovery pipelines; Epic/Microsoft and Google Health rolled generative documentation pilots into EHRs with reported time savings; Nasdaq and vendors deployed LLMs for surveillance and research; GitHub and GitLab embedded AI into the SDLC; IBM and Microsoft focused quantum roadmaps on logical qubits. Bottom line: the leverage is in systems and workflow design. Build safe tools, observability, and platform controls rather than just picking models.

AI Moves Into the Control Loop: From Agents to On-Device LLMs

Published Jan 4, 2026

Worried AI is still just hype? December’s releases show it is becoming operational, and this summary covers the essentials and immediate priorities. On 2024-12-19 Microsoft Research published AutoDev, an open-source framework for repo- and org-level multi-agent coding with tool integrations and human review at the PR boundary. The same day Qualcomm demoed a 700M-parameter LLM on Snapdragon 8 Elite running at ~20 tokens/s with ~0.6–0.7 s first-token latency at under 5 W. Mayo Clinic (2024-12-23) found that LLM-assisted notes cut documentation time by 25–40% with no significant rise in critical errors. Bayer/Tsinghua reported toxicity-prediction gains of 3–7 percentage points of AUC and potentially 20–30% fewer screens. CME, GitHub, FedNow (800+ participants, +60% daily volume), and Quantinuum/Microsoft (logical error rates 10–100× lower) all show AI moving into risk, security, payments, and fault-tolerant stacks. Action: prioritize integration, validation, and human-in-the-loop controls.

AI Embedded: On‐Device Assistants, Agentic Workflows, and Industry Impact

Published Jan 4, 2026

Worried AI is still just a research toy? Here’s a two‐week briefing so you know what to do next. Major vendors pushed AI into devices and workflows: Apple (Dec 16) rolled out on‐device models in iOS 18.2 betas, Google tightened Gemini into Android and Workspace (Dec 18–20), and OpenAI tuned GPT‐4o mini and tool calls for low‐latency apps (Dec). Teams are building agentic SDLCs—PDCVR loops surfaced on Reddit (Jan 3) and GitHub reports AI suggestions accepted in over 30% of edits on some repos. In biotech, AI‐designed drugs hit Phase II (Insilico, Dec 19) and Exscientia cited faster cycles (Dec 17); in vivo editing groups set 2026 human data targets. Payments and markets saw FedNow adoption by hundreds of banks (Dec 23) and exchanges pushing low‐latency feeds. Immediate implications: adopt hybrid on‐device/cloud models, formalize agent guardrails, update procurement for memory‐safe tech, and prioritize reliability for real‐time rails.

Agentic AI Is Taking Over Engineering: From Code to Incidents and Databases

Published Jan 4, 2026

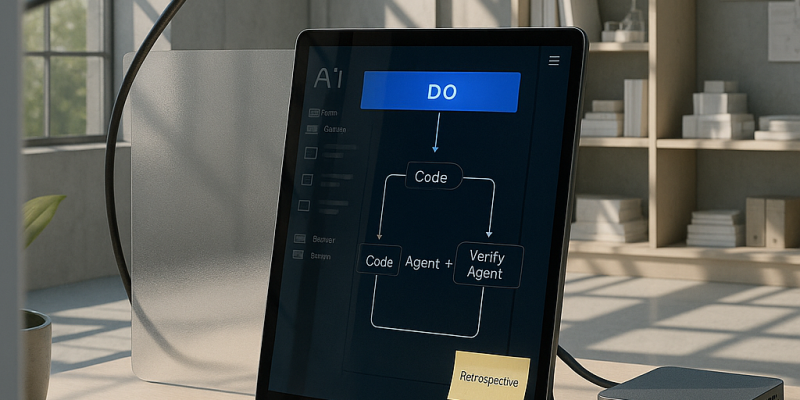

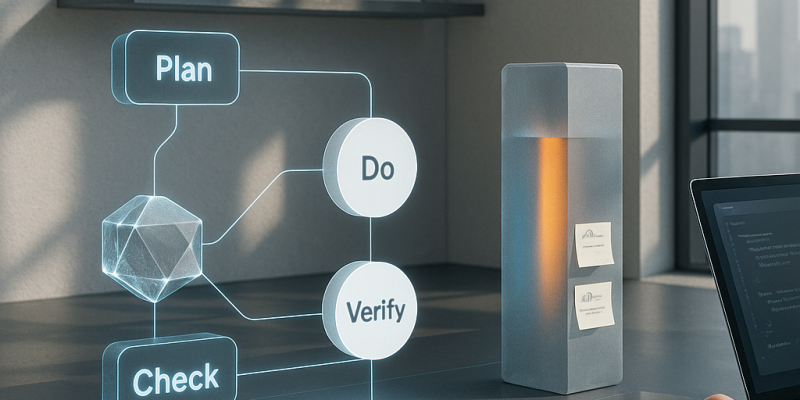

If messy backfills, one-off prod fixes, and overflowing tickets keep you up at night, here’s what changed in the last two weeks and what to do next. Vendors and open-source projects shipped agentic, multi-agent coding features in late December (Anthropic on 2025-12-23; Cursor and Windsurf; AutoGen 0.4 on 2025-12-22; LangGraph 0.2 on 2025-12-21) so LLMs can plan, implement, test, and iterate across repos. On-device moves accelerated (Apple Private Cloud Compute update on 2025-12-26; Qualcomm/MediaTek benchmarks in mid‐December), making private, low-latency assistants practical. Data and migration tooling added LLM helpers (Snowflake Dynamic Tables on 2025-12-23; Databricks Delta Live Tables on 2025-12-21), but expect humans to own the PDCVR loop (Plan, Do, Check, Verify, Retrospect). Database change management and just‐in‐time audited access also got product updates in December (PlanetScale/Neon, Liquibase, Flyway, Teleport, StrongDM). Action: adopt agentic workflows cautiously, run AI drafts through your PDCVR and PR/audit gates, and prioritize on‐device options for sensitive code.

AI‐Native Operating Models: How Agents Are Rewriting Engineering Workflows

Published Jan 3, 2026

Struggling with slow, risky engineering work? Over the past 14 days (posts dated Jan 2–3, 2026), practitioners published concrete frameworks that show AI moving from toy to governed teammate, along with practical primitives you can act on now. They surfaced PDCVR (Plan–Do–Check–Verify–Retrospect) as a daily, test‐driven loop for AI-written code; folder‐level manifests plus a prompt‐rewriting meta‐agent to keep agents aligned with the architecture; and measurable wins (typical 1–2 day tasks fell from ~8 hours to ~2–3 hours). They also compared executable workspaces such as DevScribe that bundle DB connectors, diagrams, and offline execution; outlined AI‐assisted, idempotent backfill patterns that are crucial for fintech, trading, and health; and named the “alignment tax” as a coordination problem that agents can monitor. Bottom line: this is no longer just about model choice. It is an operating‐model design problem, and teams can be expected to adopt PDCVR, folder policies, and coordination agents next.

How Agentic AI Became an Engineering OS: PDCVR, Meta‐Agents, DevScribe

Published Jan 3, 2026

What if a routine 1–2 day engineering task that used to take ~8 hours now takes ≈2–3 hours? Over the last 14 days (posts dated 2026‐01‐02 and 2026‐01‐03), engineers report agentic AI entering a second phase: teams are formalizing an AI‐native operating model around PDCVR (Plan–Do–Check–Verify–Retrospect) using Claude Code and GLM‐4.7, stacking meta‐agents + coding agents constrained by folder‐level manifests, and running work in executable DevScribe‐style workspaces. That matters because it turns AI into a controllable collaborator for high‐stakes domains—fintech, trading, digital‐health—speeding delivery, enforcing invariants, enabling tested migrations, and surfacing an “alignment tax” of coordination overhead. Key actions shown: institute PDCVR loops, add repo‐level policies, deploy meta‐agents and VERIFY agents, and instrument alignment to manage risk as AI moves from experiment to production.
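To put the quoted figures in proportion, the short calculation below works out what a drop from ~8 hours to ~2–3 hours implies. It uses only the numbers in the summary; the function and variable names are illustrative, and the inputs are the rough estimates practitioners reported, not measurements.

```python
def fraction_saved(before_hours: float, after_hours: float) -> float:
    """Share of the original effort that is no longer needed."""
    return (before_hours - after_hours) / before_hours


BEFORE_HOURS = 8.0       # reported effort for a typical 1-2 day task
AFTER_HOURS_LOW = 2.0    # lower end of the reported range
AFTER_HOURS_HIGH = 3.0   # upper end of the reported range

best_case = fraction_saved(BEFORE_HOURS, AFTER_HOURS_LOW)
worst_case = fraction_saved(BEFORE_HOURS, AFTER_HOURS_HIGH)
speedup_low = BEFORE_HOURS / AFTER_HOURS_HIGH
speedup_high = BEFORE_HOURS / AFTER_HOURS_LOW

print(f"time saved: {worst_case:.1%} to {best_case:.1%}")
print(f"speedup: {speedup_low:.2f}x to {speedup_high:.2f}x")
```

On these estimates the reported range corresponds to roughly 62.5–75% less engineer time per task, or a speedup of about 2.7× to 4×.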

How AI Became the Governed Worker Powering Modern Engineering Workflows

Published Jan 3, 2026

Teams are turning AI from an oracle into a governed worker, cutting typical 1–2 day, ~8‐hour tickets to about 2–3 hours by formalizing workflows and agent stacks. Over Jan 2–3, 2026, practitioners documented a Plan–Do–Check–Verify–Retrospect (PDCVR) loop that makes LLMs produce stepwise plans, RED→GREEN tests, and self‐audits, and that uses clustered Claude Code sub‐agents to run builds and verification. Folder‐level manifests plus a meta‐agent rewrite short prompts into file‐specific instructions, reducing architecture‐breaking edits and speeding throughput (≈20 minutes to craft the prompt, 2–3 short feedback loops, ~1 hour of manual testing). DevScribe‐style workspaces let agents run queries and tests and view schemas offline. The same patterns apply to data backfills and to lowering the measurable “alignment tax” by surfacing dependencies and missing reviewers. Bottom line: your advantage will come from designing the system that bounds and measures AI, not just from picking a model.
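As a rough illustration of how a PDCVR loop might be wired up, here is a minimal sketch. The stage names come from the summary above; everything else is an assumption made for the example, including the `run_pdcvr` function, the handler signatures, and the rule that a failed check or verify sends work back to the do stage. It is not the practitioners' published implementation.

```python
from typing import Callable, Dict, List


def run_pdcvr(handlers: Dict[str, Callable[[], bool]], max_attempts: int = 3) -> List[str]:
    """Run plan once, then do/check/verify up to max_attempts, then retrospect.

    Assumption: a failed stage restarts the cycle at "do". The source does not
    specify retry behaviour, so treat this as one possible policy.
    """
    log: List[str] = []
    handlers["plan"]()
    log.append("plan")

    passed = False
    for attempt in range(1, max_attempts + 1):
        cycle_ok = True
        for stage in ("do", "check", "verify"):
            log.append(f"{stage}#{attempt}")
            if not handlers[stage]():
                cycle_ok = False
                break  # go back to "do" on the next attempt
        if cycle_ok:
            passed = True
            break

    # Retrospect always runs so that failures are recorded too.
    handlers["retrospect"]()
    log.append("retrospect:passed" if passed else "retrospect:failed")
    return log


# Hypothetical handlers: the first check fails, the second passes.
check_results = iter([False, True])
handlers = {
    "plan": lambda: True,
    "do": lambda: True,
    "check": lambda: next(check_results),
    "verify": lambda: True,
    "retrospect": lambda: True,
}

log = run_pdcvr(handlers)
print(log)
```

In a real setup each handler would call an agent or a test runner. Returning the log makes the retrospective step auditable, which is the point of treating the loop as governance and not just prompting.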

Agentic AI Is Rewriting Software Operating Models

Published Jan 3, 2026

Ever lost hours to rework because an LLM dumped a giant, unreviewable PR? The article synthesizes Jan 2–3, 2026 practitioner threads into a concrete AI operating model you can use: a PDCVR (Plan–Do–Check–Verify–Retrospect) loop for Claude Code + GLM‐4.7 that enforces test‐driven steps, small diffs, agent‐based verification (Orchestrator, DevOps, Debugger, etc.), and logged retrospectives (GitHub prompts and sub‐agents published 2026‐01‐03). It pairs temporal discipline with spatial controls: folder‐level manifests plus a meta‐agent that expands short human intents into detailed prompts, cutting typical 1–2 day tasks from ~8 hours to ~2–3 hours (20 min for the meta‐prompt, 2–3 feedback loops, ~1 hr of manual testing). Complementary pieces include DevScribe as an offline executable cockpit (DBs, APIs, diagrams), reusable data‐migration primitives for controlled backfills, and “coordination‐watching” agents to measure the alignment tax. Bottom line: these patterns form the first AI‐native operating model, and that is where competitive differentiation will emerge for fintech, trading, and regulated teams.

From Demos to Discipline: Agentic AI's New Operating Model

Published Jan 3, 2026

Tired of AI mega‐PRs and hours lost to coordination? Engineers are turning agentic AI from demos into a repeatable operating model—you're likely to see faster, auditable workflows. Over two weeks of practitioner threads (Reddit, 2026‐01‐02/03), teams described PDCVR (Plan‐Do‐Check‐Verify‐Retrospect) run with Claude Code and GLM‐4.7, folder‐level manifests plus a meta‐agent that expands terse prompts, and executable workspaces like DevScribe. The payoff: common 1–2 day tickets fell from ~8 hours to ~2–3 hours. Parallel proposals include migration platforms (idempotent jobs, central state, chunking) for safe backfills and coordination agents to track the documented “alignment tax.” Put together—structured loops, multi‐level agents, execution‐centric docs, disciplined migrations, and alignment monitoring—this is the emergent AI operating model for high‐risk domains (fintech, digital‐health, engineering).
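The migration ingredients named above (idempotent jobs, central state, chunking) can be sketched in a few lines. This is a toy version under stated assumptions: the in-memory `state` set stands in for a durable job-state table, the `rows` dictionary stands in for a database, and all names are hypothetical. Keying the state on chunk index also assumes the id list and chunk size stay the same between runs.

```python
from typing import Callable, Dict, List, Set


def chunked(ids: List[int], size: int) -> List[List[int]]:
    """Split ids into consecutive chunks of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [ids[i:i + size] for i in range(0, len(ids), size)]


def run_backfill(
    ids: List[int],
    chunk_size: int,
    done_chunks: Set[int],
    apply_chunk: Callable[[List[int]], None],
) -> int:
    """Apply `apply_chunk` to every chunk not yet recorded in `done_chunks`.

    Returns the number of chunks processed in this run. A chunk is recorded
    only after `apply_chunk` returns, so a crash leaves it eligible for retry.
    """
    processed = 0
    for index, chunk in enumerate(chunked(ids, chunk_size)):
        if index in done_chunks:
            continue  # finished in an earlier run
        apply_chunk(chunk)
        done_chunks.add(index)
        processed += 1
    return processed


# Hypothetical data: account id -> currency code that needs upper-casing.
rows: Dict[int, str] = {1: "usd", 2: "eur", 3: "gbp", 4: "jpy", 5: "chf"}


def uppercase_currency(chunk: List[int]) -> None:
    # Idempotent write: applying it twice leaves the same value.
    for row_id in chunk:
        rows[row_id] = rows[row_id].upper()


state: Set[int] = set()  # stand-in for a durable job-state table
first_run = run_backfill(sorted(rows), 2, state, uppercase_currency)
second_run = run_backfill(sorted(rows), 2, state, uppercase_currency)
print(first_run, second_run, rows)
```

Two properties carry the safety here. The write itself is idempotent, so a retried chunk does no harm, and the state is recorded after the write, so an interrupted run resumes where it stopped instead of restarting.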

From Prompts to Protocols: Agentic AI as the Engineering Operating Model

Published Jan 3, 2026

Worried AI will speed things up but add risk? In the last 14 days (Reddit threads dated 2026‐01‐02/03), engineers pushed beyond vendor hype and sketched an AI‐native operating model you can use: a Plan–Do–Check–Verify–Retrospect (PDCVR) workflow (used with Claude Code and GLM‐4.7) that treats AI coding as a governance contract, folder‐level manifests that stop agents from bypassing architecture, and a prompt‐rewriting meta‐agent that turns terse requests into executable tasks. The combination cut typical 1–2 day tasks (≈8 hours of engineer time) to about 2–3 hours. DevScribe‐style offline executable workspaces and disciplined data backfills and migrations close gaps for regulated stacks. The remaining chokepoint is the “alignment tax” (missed requirements and scope creep), so the next steps are instrumenting coordination sentries and baking PDCVR and folder policies into your repo and release processes.
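One way to picture a folder‐level manifest is as a policy lookup that runs before an agent's changes are accepted. The sketch below is an assumption-heavy illustration: the threads do not specify a manifest format, so the `FolderPolicy` fields, the nearest-ancestor rule, and the deny-by-default behaviour are all invented for this example.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Dict, List


@dataclass(frozen=True)
class FolderPolicy:
    agent_may_edit: bool
    note: str = ""


def policy_for(path: str, manifests: Dict[str, FolderPolicy]) -> FolderPolicy:
    """Return the policy of the nearest ancestor folder that has a manifest."""
    current = PurePosixPath(path).parent
    while True:
        key = str(current)
        if key in manifests:
            return manifests[key]
        if current == current.parent:
            # Reached the top without finding a manifest: deny by default.
            return FolderPolicy(agent_may_edit=False, note="no manifest")
        current = current.parent


def violations(changed: List[str], manifests: Dict[str, FolderPolicy]) -> List[str]:
    """List the changed files that the agent was not allowed to edit."""
    return [p for p in changed if not policy_for(p, manifests).agent_may_edit]


# Hypothetical repository layout and policies.
manifests = {
    "src/api": FolderPolicy(True),
    "src/api/generated": FolderPolicy(False, "regenerate, do not hand-edit"),
    "src/ledger": FolderPolicy(False, "changes need a human owner"),
}

changed = [
    "src/api/handlers/user.py",
    "src/api/generated/client.py",
    "src/ledger/post.py",
    "README.md",
]

blocked = violations(changed, manifests)
print(blocked)
```

A check like this could run in CI or as a pre-merge hook, so an agent's diff is rejected before review if it touches a folder whose manifest forbids it.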