AI Moves From Demos to Production: Agents, On-Device Models, Lab Integration

Published Jan 4, 2026

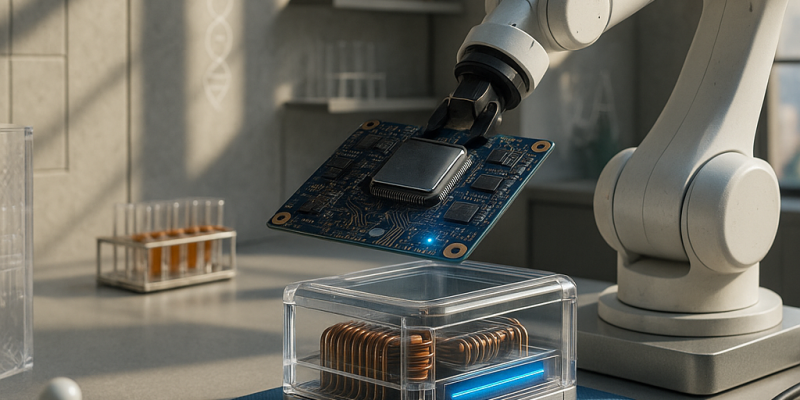

Struggling to turn year‐end pilots into production? Here’s what changed between late Dec 2024 and Jan 4, 2025, and why it matters. Code AI moved from inline copilots to agentic, repo‐wide refactors (GitHub Copilot Workspace, Sourcegraph, JetBrains), shifting the decision from “should we use autocomplete?” to “which refactors can agents do safely?” On‐device vision and multimodal models gained hardware and quantization momentum (Snapdragon X Elite, Apple Silicon, llama.cpp work) as NPUs reached ~40–45 TOPS and 7–14B models were tuned for 4–5‐bit quantization. Biotech paired generative design with automated labs (Meta ESM, Generate:Biomedicines), while gene‐editing updates tightened off‐target and immunogenicity assays. Trading pushed AI closer to exchanges (Nasdaq, Equinix) for low‐latency analytics; enterprise vendors hardened AI platform governance and observability; and creative tools embedded AI into pro pipelines (Adobe, Resolve). Immediate actions: pick safe agent use cases, design on‐device/cloud splits, invest in assay and governance tooling, and plan co‐location or platform controls.
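To see why 4–5‐bit quantization matters for 7–14B models on device, a rough weight‐memory estimate helps. The sketch below assumes weights dominate memory and ignores KV cache, activations and runtime overhead, so real footprints will be larger. `weight_gib` is a hypothetical helper, not part of llama.cpp or any vendor SDK.

```python
# Rough weight-only memory estimate for quantized models.
# Assumption: ignores KV cache, activations and runtime overhead.


def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GiB at a given bit width."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    for params in (7, 14):
        for bits in (16, 5, 4):
            print(f"{params}B @ {bits}-bit: {weight_gib(params, bits):.1f} GiB")
```

Under these assumptions a 7B model shrinks from roughly 13 GiB of weights at 16 bits to about 3.3 GiB at 4 bits. That reduction is what makes laptop‐ and phone‐class deployment plausible.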

AI Moves Into Production: Agents, On-Device Models, and Enterprise Infrastructure

Published Jan 4, 2026

Struggling to turn AI pilots into reliable production? Between Dec 22, 2024 and Jan 4, 2025, major vendors moved AI from demos to infrastructure. OpenAI, Anthropic, Databricks and frameworks like LangChain elevated “agents” as orchestration layers; Apple MLX, Ollama and LM Studio reduced the friction of running models on device; Azure AI Studio and Vertex AI added observability and safety features; biotech firms (Insilico, Recursion, Isomorphic Labs) reported multi‐asset discovery pipelines; papers in Radiology and The Lancet Digital Health showed imaging AUCs commonly above 0.85; CISA and security reports pushed memory‐safe languages, citing 60–70% of critical bugs as tied to memory‐unsafe code; quantum vendors focused on logical qubits; and quant platforms added LLM‐augmented research. Why it matters: the decision is now about agent architecture, two‐tier cloud/local stacks, platform governance, and structural security. Immediate actions: pick an orchestration substrate, evaluate local‐model tradeoffs, build in observability and guardrails, and prioritize memory‐safe toolchains.
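A two‐tier cloud/local stack usually needs an explicit routing rule. The sketch below shows one minimal, hypothetical policy. It assumes an on‐device model callable that returns `None` when it cannot serve a request (for example, out of memory or low confidence) and a cloud callable that always answers. The names `route`, `RouteResult` and `max_local_chars` are illustrative, not APIs from MLX, Ollama or LM Studio.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RouteResult:
    tier: str          # "local" or "cloud"
    output: str
    latency_ms: float  # wall-clock time, including any fallback


def route(
    prompt: str,
    local: Callable[[str], Optional[str]],
    cloud: Callable[[str], str],
    max_local_chars: int = 2000,
) -> RouteResult:
    """Prefer the on-device model; fall back to the cloud tier.

    Hypothetical policy: prompts longer than max_local_chars skip the
    local model, and a local result of None triggers cloud fallback.
    """
    start = time.perf_counter()
    if len(prompt) <= max_local_chars:
        out = local(prompt)
        if out is not None:
            return RouteResult("local", out, (time.perf_counter() - start) * 1000)
    out = cloud(prompt)
    return RouteResult("cloud", out, (time.perf_counter() - start) * 1000)


if __name__ == "__main__":
    on_device = lambda p: None if "summarize" in p else f"local:{p}"
    server = lambda p: f"cloud:{p}"
    for q in ("translate hello", "summarize this thread"):
        r = route(q, on_device, server)
        print(r.tier, r.output, f"{r.latency_ms:.2f} ms")
```

Recording the tier and latency for each call gives an observability layer something to aggregate. A production router would also need timeouts, retries and privacy rules about which prompts may leave the device.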

From Labs to Live: AI, Quantum, and Secure Software Enter Production

Published Jan 4, 2026

Worried AI will break your ops or miss regulatory traps? In the last 14 days, major vendors and research teams pushed AI from prototypes into embedded, auditable infrastructure. Here’s what you need to know and do. Meta open‐sourced a multimodal protein/small‐molecule model (tech report, 2025‐12‐29), and an MIT–Broad preprint (2025‐12‐27) showed retrieval‐augmented, domain‐tuned LLMs outperforming bespoke bio‐models. GitHub (Copilot Agentic Flows, 2025‐12‐23) and Sourcegraph (Cody Workflows v2, 2025‐12‐27) shipped agentic dev workflows. Apple (2025‐12‐20) and Qualcomm/Samsung (2025‐12‐28) pushed phone‐class multimodal inference. IBM (2025‐12‐19) and QuTech–Quantinuum (2025‐12‐26) reported quantum error‐correction progress. Real healthcare deployments cut time‐to‐first‐read by ~15–25% (European network, 2025‐12‐22). Next steps: tighten governance and observability for agents, bind models to curated retrieval and lab/EHR workflows, and accelerate memory‐safe migration and regression monitoring.
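Binding a model to curated retrieval can be as simple as searching only a vetted, ID‐tagged corpus and refusing when nothing matches. The sketch below uses token‐overlap scoring as a stand‐in for an embedding retriever. The corpus entries, their IDs and the `NO_CURATED_CONTEXT` sentinel are hypothetical.

```python
import re
from collections import Counter

# Hypothetical curated corpus: only vetted, ID-tagged sources are searchable.
CURATED = {
    "assay-sop-12": "off-target editing assay protocol for guide RNA screening",
    "ehr-note-spec": "structured EHR note fields for radiology reads",
    "lab-qc-03": "plate reader calibration and QC thresholds",
}


def tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[tuple[str, int]]:
    """Rank curated docs by token overlap with the query; drop zero-overlap docs."""
    q = tokens(query)
    scored = [(doc_id, sum((q & tokens(body)).values())) for doc_id, body in CURATED.items()]
    scored = [s for s in scored if s[1] > 0]
    return sorted(scored, key=lambda s: (-s[1], s[0]))[:k]


def grounded_prompt(query: str) -> str:
    hits = retrieve(query)
    if not hits:
        return "NO_CURATED_CONTEXT"  # refuse rather than answer ungrounded
    context = "\n".join(f"[{doc_id}] {CURATED[doc_id]}" for doc_id, _ in hits)
    return f"Answer only from the sources below and cite their IDs.\n{context}\n\nQ: {query}"
```

The refusal path is the important design choice. A model that falls back to answering from its own weights is no longer bound to the curated sources.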

From Demos to Infrastructure: AI Agents, Edge Models, and Secure Platforms

Published Jan 4, 2026

If you fear AI will push unsafe or costly changes into production, you're not alone. Here's what happened in the two weeks ending 2026‐01‐04 and what to do about it. Vendors and open projects (GitHub, Replit, Cursor, OpenDevin) moved coding agents from chat into auditable issue→plan→PR workflows with sandboxed test execution and logs, and observability vendors added LLM change telemetry. At the same time, sub‐10B multimodal models ran on device (Qualcomm NPUs at ~5–7 W; Core ML and tooling updates; llama.cpp and mlc‐llm mobile optimizations), platforms consolidated around model gateways and Backstage plugins, and security shifted toward Rust and SBOM defaults. In biotech, closed‐loop pipelines linking AI with wet labs, along with in‐vivo editing advances, compressed experimental timelines, while quantum work pivoted to logical qubits and error correction. Why it matters: faster iteration, new privacy/latency tradeoffs, and governance and spend risks. Immediate actions: gate agentic PRs with tests and code owners, centralize LLM routing and observability, and favor memory‐safe build defaults.
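Gating agentic PRs with tests and code owners can be expressed as a merge‐check function. This is a simplified sketch. The `CODE_OWNERS` prefix map, the `coding-agent[bot]` account name and the `PullRequest` fields are assumptions, and GitHub's real CODEOWNERS matching (glob patterns, where the last matching rule wins) is more involved.

```python
from dataclasses import dataclass, field

# Hypothetical CODEOWNERS-style map: path prefix -> accounts allowed to approve.
CODE_OWNERS = {
    "payments/": {"alice", "bob"},
    "infra/": {"carol"},
}
# Hypothetical bot identities used by coding agents.
AGENT_ACCOUNTS = {"coding-agent[bot]"}


@dataclass
class PullRequest:
    author: str
    changed_paths: list[str]
    tests_passed: bool  # result of the sandboxed CI run
    approvals: set[str] = field(default_factory=set)


def missing_owner_groups(pr: PullRequest) -> list[str]:
    """Prefixes touched by the PR that lack an approval from one of their owners."""
    missing = []
    for prefix, owners in CODE_OWNERS.items():
        touched = any(path.startswith(prefix) for path in pr.changed_paths)
        if touched and not (owners & pr.approvals):
            missing.append(prefix)
    return missing


def can_merge(pr: PullRequest) -> tuple[bool, str]:
    if not pr.tests_passed:
        return False, "sandboxed tests failing"
    human_approvals = pr.approvals - AGENT_ACCOUNTS
    if pr.author in AGENT_ACCOUNTS and not human_approvals:
        return False, "agent-authored PR needs a human approval"
    missing = missing_owner_groups(pr)
    if missing:
        return False, "missing code-owner approval for " + ", ".join(missing)
    return True, "ok"
```

Returning a reason string alongside the decision keeps each blocked merge explainable in the PR log, which supports the auditable workflows described above.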

On‐Device AI Goes Multimodal: Privacy, Speed, and Offline Power

Published Jan 4, 2026

3B–15B parameter models are moving on‐device, not just running in the cloud. Apple’s developer docs (12/23/2024) and Snapdragon X Elite previews (late Dec–early Jan, ahead of CES) show 3B–15B and 7B–13B models running locally on A17 Pro, M‐series chips and NPUs, with server fallbacks. What does that mean for you? Expect more private, lower‐latency features in Mail, Notes and Copilot+ PCs (OEM devices due early 2025), but also new constraints: energy budgets, quantization, and heterogeneous NPUs. Meanwhile, GitHub and Datadog pushed agents into structured workflows (Dec 2024), biotech firms (Absci, Generate, Intellia) reported AI‐designed candidates, and quantum vendors and exchanges are refocusing on logical qubits and ML surveillance, respectively. Immediate takeaway: prioritize integration, efficiency, and governance, and treat models as OS‐level services with SLOs and audit trails.
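Treating a model as a service with SLOs and audit trails implies checking observed latency against a target and recording the result. The sketch below computes a p95 with Python's `statistics.quantiles` and emits one JSON line per check. The model ID, the 500 ms target and the record fields are hypothetical.

```python
import json
import statistics
import time


def p95(samples_ms: list[float]) -> float:
    """95th percentile: quantiles(n=20) returns 19 cut points, and the last one is p95."""
    return statistics.quantiles(samples_ms, n=20)[-1]


def slo_check(model_id: str, samples_ms: list[float], slo_p95_ms: float) -> dict:
    observed = p95(samples_ms)
    record = {
        "ts": time.time(),
        "model": model_id,
        "p95_ms": round(observed, 1),
        "slo_p95_ms": slo_p95_ms,
        "ok": observed <= slo_p95_ms,
    }
    # One JSON line per check; a real audit trail would append to durable storage.
    print(json.dumps(record))
    return record


if __name__ == "__main__":
    slo_check("on-device-3b", [100.0] * 19 + [900.0], slo_p95_ms=500.0)
```

With 19 fast calls and one 900 ms outlier, the default "exclusive" quantile method puts p95 at 860 ms, so the check records a breach. Tail percentiles from small samples are sensitive to single outliers, which is worth keeping in mind when setting SLO windows.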

AI Becomes Infrastructure: From Coding Agents to Edge, Quantum, Biotech

Published Jan 4, 2026

If you still think AI is just autocomplete, wake up: in the two weeks from 2024-12-22 to 2025-01-04, major vendors moved AI into IDEs, repos, devices, labs and security frameworks. Here’s what changed and what to do. JetBrains (release notes, 2024-12-23) added multifile navigation, test generation and refactoring inside IntelliJ; GitHub rolled out Copilot Workspace and IDE integrations; Google and Microsoft refreshed enterprise integration patterns. Qualcomm and Nvidia updated on-device stacks (around 2024-12-22 to 12-23); Meta and community forks pushed sub‐3B LLaMA variants for edge use. Quantinuum reported 8 logical qubits (late 2024). DeepMind/Isomorphic and open-source projects packaged AlphaFold 3 into lab pipelines. CISA and OSS communities extended SBOM and supply‐chain guidance to models. Bottom line: AI is now infrastructure. Prioritize repo/CI/policy integration, model provenance, and end‐to‐end workflows if you want production value.
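Model provenance starts with checking that the artifacts you deploy match the digests you recorded. The sketch below verifies files against a plain name→SHA‐256 mapping. It is loosely analogous to the hash entries in an SBOM but does not use SPDX or CycloneDX, and the manifest contents are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model weights need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_artifacts(manifest: dict[str, str], root: Path) -> list[str]:
    """Return names of artifacts that are missing or whose digest does not match."""
    bad = []
    for name, expected in manifest.items():
        p = root / name
        if not p.is_file() or sha256_file(p) != expected.lower():
            bad.append(name)
    return bad
```

Running a check like this in CI before a model is promoted, and failing the job when the returned list is non‐empty, is one way to tie provenance to the repo/CI/policy integration the paragraph recommends.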