From Demos to Infrastructure: AI Agents, Edge Models, and Secure Platforms

Published Jan 4, 2026

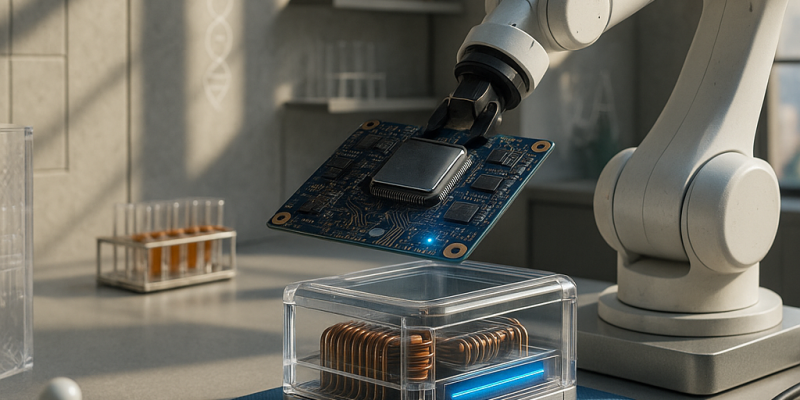

If you fear AI will push unsafe or costly changes into production, you're not alone. Here's what happened in the two weeks ending 2026-01-04 and what to do about it. Vendors and open projects (GitHub, Replit, Cursor, OpenDevin) moved coding agents from chat into auditable issue→plan→PR workflows with sandboxed test execution and logs; observability vendors added LLM change telemetry. At the same time, sub-10B multimodal models ran on device (Qualcomm NPUs at ~5–7W; Core ML/tooling updates; llama.cpp/mlc-llm mobile optimizations), platforms consolidated around model gateways and Backstage plugins, and security defaults shifted toward Rust and SBOMs. In biotech, closed-loop AI–wet lab pipelines and in-vivo editing advances tightened experimental timelines, while quantum work pivoted to logical qubits and error correction. Why it matters: faster iteration, new privacy/latency tradeoffs, and governance/spend risks. Immediate actions: gate agentic PRs with tests and code owners, centralize LLM routing/observability, and favor memory-safe build defaults.
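
As a rough illustration of "gate agentic PRs with tests and code owners", the sketch below encodes one possible merge policy. Everything in it is an assumption made for illustration: the `PullRequest` type, the `CODE_OWNERS` map, and the reviewer names are hypothetical and do not correspond to any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical, simplified view of a pull request (not a real API type)."""
    author_is_agent: bool
    tests_passed: bool
    changed_paths: list[str]
    approvals: set[str] = field(default_factory=set)


# Hypothetical ownership map: path prefix -> reviewers allowed to approve it.
CODE_OWNERS = {
    "payments/": {"alice", "bob"},
    "infra/": {"carol"},
}


def owners_for(path: str) -> set[str]:
    """Return the owners of the longest matching path prefix, if any."""
    matches = [prefix for prefix in CODE_OWNERS if path.startswith(prefix)]
    if not matches:
        return set()
    return CODE_OWNERS[max(matches, key=len)]


def may_merge(pr: PullRequest) -> bool:
    """Allow a merge only if tests pass and agent PRs have human sign-off."""
    if not pr.tests_passed:
        return False
    if not pr.author_is_agent:
        return True
    # Agent-authored PRs always need at least one human approval.
    if not pr.approvals:
        return False
    for path in pr.changed_paths:
        owners = owners_for(path)
        # Changes to owned paths need approval from one of that path's owners.
        if owners and not (owners & pr.approvals):
            return False
    return True
```

In this sketch an agent-authored change to `payments/api.py` approved only by `carol` is rejected, because `carol` does not own that path. A real setup would more likely rely on the hosting platform's own branch protection and ownership rules than on custom code.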

AI Goes Operational: Multimodal Agents, Quantum Gains, and Biotech Pipelines

Published Jan 4, 2026

Worried your AI pilots won’t scale into real workflows? Here’s what happened in late‐Dec 2024–early‐Jan 2025 and why you should care: Google rolled out Gemini 2.0 Flash/Nano (12‐23‐2024) to enable low‐latency, on‐device multimodal agents that call tools; OpenAI’s o3 (announced 12‐18‐2024) surfaced as a slower but more reliable backend reasoning engine in early benchmarks; IBM and Quantinuum shifted attention to logical qubits and error‐corrected performance; biotech firms moved AI design into LIMS‐connected pipelines with AI‐initiated candidates heading toward human trials (year‐end 2024/early 2025); healthcare imaging AIs gained regulatory clearances and EHR‐native scribes showed time‐savings; fintech and quant teams embedded LLMs into surveillance and research; platform engineering and security patterns converged. Bottom line: models are becoming components in governed systems—so prioritize systems thinking, integration depth, human‐in‐the‐loop safety, and independent benchmarking.

AI Goes Operational: Agentic Coding, On-Device Models, Drug Discovery

Published Jan 4, 2026

55% faster coding? That's the shake-up: in late Dec 2025–early Jan 2026 vendors moved AI from demos into production workflows, and you need to know what to act on. GitHub (2025-12-23) rolled out Copilot for Azure/Microsoft 365 and, within the last 14 days, started Copilot Workspace private previews for “issue-to-PR” agentic flows; Microsoft reports 55% faster completion for some tasks. Edge vendors showed concrete on-device wins: Qualcomm cites up to 45 TOPS for NPUs, community tests (2025-12-25–2026-01-04) ran Llama 3.2 3B/8B with 2,000 AI-designed compounds; healthcare and vendors report >90% metrics and scribes saving 5–7 minutes per visit. Exchanges process billions of messages daily; quantum and security updates emphasize logical qubits and memory-safe language migrations. Bottom line: shift from “can it?” to “how do we integrate, govern, and observe it?”

From Demos to Production: AI Becomes Core Infrastructure Across Industries

Published Jan 4, 2026

Worried AI pilots will break your repo or your compliance? In the last two weeks (late Dec 2025–early Jan 2026) vendors pushed agentic, repo‐wide coding tools (GitHub Copilot Workspace, Sourcegraph Cody, Tabnine, JetBrains) into structured pilots; on‐device multimodal models hit practical latencies (Qualcomm, Apple, community toolchains); AI became treated as first‐class infra (Humanitec, Backstage plugins; Arize, LangSmith, W&B observability); quantum announcements emphasized logical qubits and error‐correction; pharma and protein teams reported end‐to‐end AI discovery pipelines; brokers tightened algorithmic trading guardrails; governments and OSS groups pushed memory‐safe languages and SBOMs; and creative suites integrated AI as assistive features with provenance. What to do now: pilot agents with strict review/audit, design hybrid on‐device/cloud flows, platformize AI telemetry and governance, adopt memory‐safe/supply‐chain controls, and track logical‐qubit roadmaps for timing.

AI Moves Into the Control Loop: From Agents to On-Device LLMs

Published Jan 4, 2026

Worried AI is still just hype? December’s releases show it’s becoming operational, and this summary gives you the essentials and immediate priorities. On 2024-12-19 Microsoft Research published AutoDev, an open-source framework for repo- and org-level multi-agent coding with tool integrations and human review at the PR boundary. The same day Qualcomm demoed a 700M-parameter LLM on Snapdragon 8 Elite at ~20 tokens/s and ~0.6–0.7s first-token latency at <5W. Mayo Clinic (2024-12-23) found LLM-assisted notes cut documentation time 25–40% with no significant rise in critical errors. Bayer/Tsinghua reported toxicity-prediction gains (3–7pp AUC) and potential 20–30% fewer screens. CME, GitHub, FedNow (800+ participants, +60% daily volume) and Quantinuum/Microsoft (logical error rates 10–100× lower) all show AI moving into risk, security, payments, and fault-tolerant stacks. Action: prioritize integration, validation, and human-in-the-loop controls.
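
To put the quoted on-device figures in user-facing terms, here is a small back-of-the-envelope calculation. It assumes a simple model that is not from the source: the first token arrives after the first-token latency and the rest stream at a steady rate. The 100-token reply length is an arbitrary illustrative choice.

```python
def response_time_s(tokens: int, first_token_s: float, tokens_per_s: float) -> float:
    """Approximate time to stream `tokens` tokens.

    Assumes the first token arrives after `first_token_s` and the remaining
    tokens arrive at a steady `tokens_per_s` (a simplification).
    """
    if tokens <= 0:
        return 0.0
    return first_token_s + (tokens - 1) / tokens_per_s


# Figures quoted above: ~20 tokens/s and ~0.6-0.7 s first-token latency.
low = response_time_s(100, 0.6, 20.0)
high = response_time_s(100, 0.7, 20.0)
print(f"100-token reply: {low:.2f}s to {high:.2f}s")
```

Under these assumptions a 100-token reply takes roughly five and a half seconds, so decode throughput, not first-token latency, dominates for anything longer than a short answer.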

Multimodal AI Is Becoming the Universal Interface for Complex Workflows

Published Dec 6, 2025

If you’re tired of stitching OCR, ASR, vision models, and LLMs together, pay attention: in the last 14 days major providers pushed multimodal APIs and products into broad preview or GA, turning “nice demos” into a default interface layer. You’ll get models that accept text, images, diagrams, code, audio, and video in one call and return text, structured outputs (JSON/function calls), or tool actions — cutting brittle pipelines for engineers, quants, fintech teams, biotech labs, and creatives. Key wins: cross‐modal grounding, mixed‐format workflows, structured tool calling, and temporal video reasoning. Key risks: harder evaluation, more convincing hallucinations, and PII/compliance challenges that may force on‐device or on‐prem inference. Watch for multimodal‐default SDKs, agent frameworks with screenshot/PDF/video support, and domain benchmarks; immediate moves are to think multimodally, redesign interfaces, and add validation/safety layers.
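
One way to picture the "validation/safety layers" mentioned above is a strict check on a model's structured output before any tool runs. The sketch below is a minimal example under stated assumptions: the `{"tool": ..., "arguments": ...}` shape, the `TOOLS` registry, and the `extract_invoice_total` tool are all hypothetical and not taken from any provider's SDK.

```python
import json

# Hypothetical tool registry: tool name -> required argument names and types.
TOOLS = {
    "extract_invoice_total": {"currency": str, "amount": float},
}


def validate_tool_call(raw: str) -> dict:
    """Parse a model's JSON tool call and reject anything malformed."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    tool = call.get("tool")
    if not isinstance(tool, str) or tool not in TOOLS:
        raise ValueError(f"unknown tool: {tool!r}")
    schema = TOOLS[tool]
    args = call.get("arguments")
    # Require exactly the declared arguments: no missing and no extra keys.
    if not isinstance(args, dict) or set(args) != set(schema):
        raise ValueError("arguments do not match the tool schema")
    for name, expected in schema.items():
        value = args[name]
        # Accept ints where floats are expected, but never bools.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"argument {name!r} has the wrong type")
        args[name] = value
    return call
```

A check like this only catches structural errors. A well-formed call can still carry a hallucinated value, so domain-level checks (for example, comparing an extracted total against the source document) would still be needed.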

Why Small, On‐Device "Distilled" AI Will Replace Cloud Giants

Published Dec 6, 2025

Cloud inference bills and GPU scarcity are squeezing margins — want a cheaper, faster alternative? Over the past two weeks research releases, open‐source projects, and hardware roadmaps have pushed the industrialization of distilled, on‐device and domain‐specific AI. Large teachers (100B+ params) are being compressed into student models (often 1–3B) via int8/int4/binary quantization and pruning to meet targets like <50 ms latency and <1 GB RAM, running on NPUs and compact accelerators (tens of TOPS). That matters for fintech, trading, biotech, devices, and developer tooling: lower latency, better privacy, easier regulatory proofs, and offline operation. Immediate actions: build distillation + evaluation pipelines, adopt model catalogs and governance, and treat model SBOMs as security hygiene. Watch for risks: harder benchmarking, fragmentation, and supply‐chain tampering. Mastering this will be a 2–3 year competitive edge.
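
The memory targets above can be sanity-checked with simple arithmetic, and the core of int8 quantization fits in a few lines. The sketch below is illustrative only: it counts weight storage alone (no activations or KV cache) and uses plain symmetric per-tensor quantization, which is an assumed scheme and not one the source specifies.

```python
def weight_memory_gb(params: float, bits: int) -> float:
    """Memory for weights alone, in GB (10**9 bytes)."""
    return params * bits / 8 / 1e9


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization, so that w is close to q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]


for params in (1e9, 3e9):
    for bits in (8, 4):
        size = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.0f}B params at int{bits}: {size:.1f} GB")
```

By this estimate a 1B-parameter student needs about 1 GB at int8 and 0.5 GB at int4, while a 3B student still needs about 1.5 GB at int4. A target like <1 GB RAM therefore points toward the smaller end of the 1–3B range or toward sub-4-bit schemes, before accounting for runtime overhead.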

Programmable Sound: AI Foundation Models Are Rewriting Music and Game Audio

Published Dec 6, 2025

Tired of wrestling with flat, uneditable audio tracks? Over the last 14 days major labs and open‐source communities converged on foundation audio models that treat music, sound and full mixes as editable, programmable objects—backed by code, prompts and real‐time control—here’s what that means for you. These scene‐level, stem‐aware models can separate/generate stems, respect structure (intro/verse/chorus), follow MIDI/chord constraints, and edit parts non‐destructively. That shift lets artists iterate sketches and swap drum textures without breaking harmonies, enables adaptive game and UX soundtracks, and opens audio agents for live scoring or auto‐mixing. Risks: style homogenization, data provenance and legal ambiguity, and latency/compute tradeoffs. Near term (12–24 months) action: treat models as idea multipliers, invest in unique sound data, prioritize controllability/low‐latency integrations, and add watermarking/provenance for safety.

Edge AI Meets Quantum: MMEdge and IBM Reshape the Future

Published Nov 19, 2025

Latency killing your edge apps? Read this: two near-term advances could change where AI runs. MMEdge (arXiv:2510.25327) is a recent on-device multimodal framework that pipelines sensing and encoding and uses temporal aggregation and speculative skipping to start inference before full inputs arrive. Tested in a UAV and on standard datasets, it cuts end-to-end latency while keeping accuracy. IBM unveiled Nighthawk (120 qubits, 218 tunable couplers; up to 5,000 two-qubit gates; testing late 2025) and Loon (112 qubits, six-way couplers) as stepping stones toward fault-tolerant QEC and a Starling system by 2029. Why it matters to you: faster, deterministic edge decisions for AR/VR, drones, medical wearables; new product and investment opportunities; and a need to track edge latency benchmarks, early quantum demos, and hardware–software co-design.

Google Unveils Gemini 3.0 Pro: 1T-Parameter, Multimodal, 1M-Token Context

Published Nov 18, 2025

Worried your AI can’t handle whole codebases, videos, or complex multi-step reasoning? Here’s what to expect: Google announced Gemini 3.0 Pro / Deep Think, a >1 trillion-parameter Mixture-of-Experts model (about 15–20B parameters active per query) with native text/image/audio/video inputs, two context tiers (200,000 and 1,000,000 tokens), and stronger agentic tool use. Benchmarks in the article show GPQA Diamond 91.9%, Humanity’s Last Exam 37.5% without tools and 45.8% with tools, and ScreenSpot-Pro 72.7%. Preview access opened to select enterprise users via API in Nov-2025, with broader release expected Dec-2025 and general availability early 2026. Why it matters: you can build longer, multimodal, reasoning-heavy apps, but plan for higher compute/latency, privacy risks from audio/video, and robustness testing. Immediate watch items: independent benchmark validation, tooling integration, pricing for 200k vs 1M tokens, and modality-specific safety controls.