From Demos to Infrastructure: AI Agents, Edge Models, and Secure Platforms

Published Jan 4, 2026

If you fear AI will push unsafe or costly changes into production, you're not alone. Here is what happened in the two weeks ending 2026-01-04 and what to do about it. Vendors and open projects (GitHub, Replit, Cursor, OpenDevin) moved coding agents from chat into auditable issue→plan→PR workflows with sandboxed test execution and logs; observability vendors added LLM change telemetry. At the same time, sub-10B multimodal models ran on device (Qualcomm NPUs at ~5–7W; Core ML/tooling updates; llama.cpp/mlc-llm mobile optimizations), platforms consolidated via model gateways and Backstage plugins, and security shifted toward Rust/SBOM defaults. In biotech, closed-loop AI–wet lab pipelines and in-vivo editing advances tightened experimental timelines, while quantum work pivoted to logical qubits and error correction. Why it matters: faster iteration, new privacy/latency tradeoffs, and governance/spend risks. Immediate actions: gate agentic PRs with tests and code owners, centralize LLM routing/observability, and favor memory-safe build defaults.
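To make the first immediate action concrete, here is a minimal sketch of a merge gate for agent-authored PRs. It is an illustration under stated assumptions, not any vendor's API: the `OWNERS` map, the `PullRequest` fields, and the `can_merge` function are all hypothetical names, and the glob matching is a simplification of how real code-owner files work.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch

# Hypothetical ownership map (glob pattern -> owners); not a real CODEOWNERS parser.
OWNERS = {
    "infra/*": {"alice"},
    "src/*": {"bob", "carol"},
}


@dataclass
class PullRequest:
    author_is_agent: bool
    changed_files: list
    tests_passed: bool
    approvals: set = field(default_factory=set)


def owners_for(path):
    """Collect the owners of every pattern that matches the path."""
    owners = set()
    for pattern, names in OWNERS.items():
        if fnmatch(path, pattern):
            owners |= names
    return owners


def can_merge(pr):
    """Return (allowed, reasons). Only agent-authored PRs are gated in this sketch."""
    reasons = []
    if not pr.author_is_agent:
        return True, reasons
    if not pr.tests_passed:
        reasons.append("tests did not pass")
    for path in pr.changed_files:
        owners = owners_for(path)
        if not owners:
            # Unowned paths are blocked rather than silently allowed.
            reasons.append(f"no code owner defined for {path}")
        elif not (owners & pr.approvals):
            reasons.append(f"missing code-owner approval for {path}")
    return (not reasons), reasons
```

The gate fails closed: an agent PR that touches a path with no listed owner is blocked, which is one possible design choice for keeping unreviewed changes out of production.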

From Labs to Devices: AI and Agents Become Operational Priorities

Published Jan 4, 2026

Worried your AI pilots stall at deployment? In the past 14 days major vendors pushed capabilities that make operationalization the real battleground. Here’s what to know for your roadmap. Big labs shipped on-device multimodal tools (xAI’s Grok-2-mini, API live 2025-12-23; Apple’s MLX quantization updates 2025-12-27), agent frameworks added observability and policy (Microsoft Azure AI Agents preview 2025-12-20; LangGraph RC 1.0 on 2025-12-30), and infra vendors published runbooks (HashiCorp refs 2025-12-19; Datadog LLM Observability GA 2025-12-27). Quantum roadmaps emphasize logical qubits (IBM target: 100+ logical qubits by 2029; Quantinuum reports logical error 50% on 2025-12-22). In biotech and finance, Beam showed >70% in-vivo editing on 2025-12-19, and Nasdaq piloted LLM triage reducing false positives 20–30% on 2025-12-21. Bottom line: focus less on raw model quality and more on SDK/hardware integration, SRE/DevOps, observability, and governance to actually deploy value.

How GPU Memory Virtualization Is Breaking AI's Biggest Bottleneck

Published Dec 6, 2025

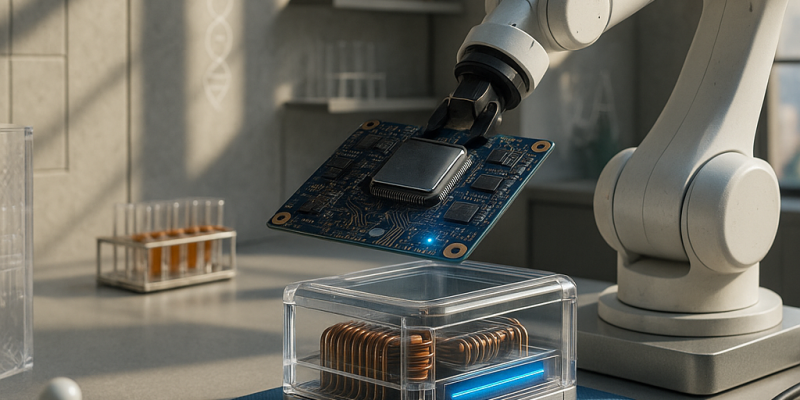

In the last two weeks GPU memory virtualization and disaggregation moved from infrastructure curiosity to a fast-moving production trend, because models and simulations increasingly need tens to hundreds of gigabytes of VRAM. Read this and you'll know what's changing, why it matters to your AI, quant, or biotech workloads, and what to do next. The core idea: software-defined pooled VRAM (virtualized memory, disaggregated pools, and communication-optimized tensor parallelism) makes many smaller GPUs look like one big memory space. That means you can train larger or more specialized models, host denser agentic workloads, and run bigger Monte Carlo or molecular simulations without buying a new fleet. Tradeoffs: paging latency, new failure modes, and security/isolation risks. Immediate steps: profile memory footprints, adopt GPU-aware orchestration, refactor for sharding/checkpointing, and plan for mixed hardware generations.
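As a starting point for the "profile memory footprints" step, the back-of-envelope sketch below estimates model-state memory for training and how many GPUs a pooled setup would need to hold it. The defaults are assumptions, not measurements: 16 bytes per parameter is the common rule of thumb for mixed-precision Adam (2 for weights, 2 for gradients, 12 for optimizer state), activations are ignored, and `usable_fraction` is a hypothetical headroom factor. The function names are illustrative only.

```python
import math


def training_footprint_gib(n_params, bytes_weights=2, bytes_grads=2, bytes_optimizer=12):
    """Rough model-state footprint in GiB; activations and buffers are excluded."""
    total_bytes = n_params * (bytes_weights + bytes_grads + bytes_optimizer)
    return total_bytes / 2**30


def min_gpus_for_pool(footprint_gib, vram_per_gpu_gib, usable_fraction=0.8):
    """Smallest GPU count whose pooled usable VRAM covers the footprint."""
    usable = vram_per_gpu_gib * usable_fraction
    return math.ceil(footprint_gib / usable)


# Example: a 7B-parameter model pooled across 24 GiB cards (assumed sizes).
footprint = training_footprint_gib(7_000_000_000)
gpus = min_gpus_for_pool(footprint, vram_per_gpu_gib=24)
print(f"{footprint:.1f} GiB of model state -> at least {gpus} GPUs")
```

An estimate like this only bounds the problem. Real profiling should measure peak allocation under the actual batch size and sequence length, since activations and fragmentation can dominate.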

America’s AI Action Plan Sparks Federal-State Regulatory Showdown

Published Nov 12, 2025

Between July and September 2025 the US reshaped its AI policy in three steps. On July 1 the Senate voted 99–1 to remove a provision that would have imposed a 10-year ban on state AI regulation, preserving state authority. On July 23 the White House unveiled “Winning the AI Race: America’s AI Action Plan,” developed under Executive Order 14179, with more than 90 federal actions across three pillars: accelerating innovation, building AI infrastructure, and international/security priorities. The plan includes an export strategy, streamlined data-center permitting, deregulatory reviews, and procurement standards requiring models “free from ideological bias.” On Sept. 29 California enacted SB 53, mandating public disclosure of safety protocols, whistleblower protections, and 15-day reporting of “critical safety incidents.” Together these moves shift federal influence from banning state regulation to incentives and procurement, and they create new compliance and contracting risks for companies. They warrant immediate monitoring of Action Plan implementation, permitting reforms, procurement rules, state responses, and definitions of “ideological bias.”