AI Moves Into Production: Agents, On-Device Models, and Enterprise Infrastructure

Published Jan 4, 2026

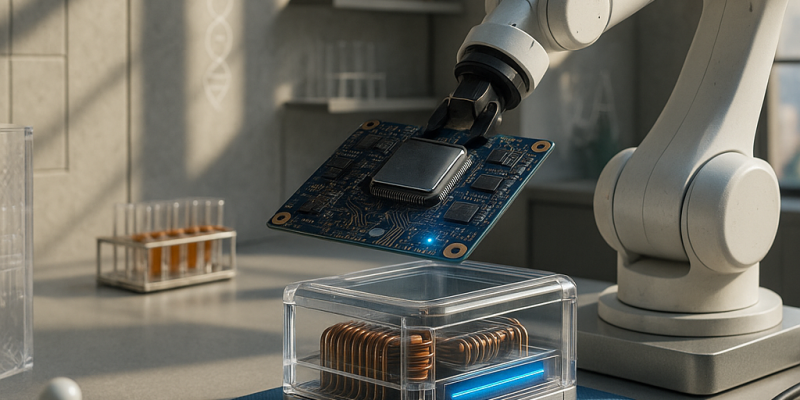

Struggling to turn AI pilots into reliable production systems? Between Dec 22, 2024 and Jan 4, 2025, major vendors moved AI from demos to infrastructure. OpenAI, Anthropic, Databricks, and frameworks like LangChain elevated “agents” to orchestration layers. Apple MLX, Ollama, and LM Studio cut friction for on-device models. Azure AI Studio and Vertex AI added observability and safety features. Biotech firms (Insilico, Recursion, Isomorphic Labs) reported multi-asset discovery pipelines, and papers in Radiology and Lancet Digital Health showed imaging AUCs commonly above 0.85. CISA and security reports pushed memory-safe languages, with 60–70% of critical bugs tied to memory-unsafe code. Quantum vendors focused on logical qubits, and quant platforms added LLM-augmented research. Why it matters: the decisions that count now concern agent architecture, two-tier cloud/local stacks, platform governance, and structural security. Immediate asks: pick an orchestration substrate, evaluate local model tradeoffs, bake in observability and guardrails, and prioritize memory-safe toolchains.
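To make the two-tier cloud/local idea concrete, here is a minimal Python sketch of a routing rule. Everything in it is an assumption for illustration: the `Request` fields, the thresholds, and the `choose_tier` function are hypothetical and do not come from any vendor's API. It shows one way to weigh privacy, prompt size, and latency when deciding where a request runs.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them against your own hardware and models.
LOCAL_CONTEXT_LIMIT_CHARS = 8000   # assumed capacity of the on-device model
LOW_LATENCY_THRESHOLD_MS = 300     # assumed cutoff where a network round trip is too slow


@dataclass(frozen=True)
class Request:
    """A hypothetical inference request with the attributes the router inspects."""
    prompt: str
    contains_sensitive_data: bool = False
    max_latency_ms: int = 2000


def choose_tier(req: Request) -> str:
    """Return "local" or "cloud" for a request.

    Rule order encodes priority: privacy first, then capacity, then latency.
    """
    if req.contains_sensitive_data:
        # Sensitive data stays on device, even if the prompt is large.
        return "local"
    if len(req.prompt) > LOCAL_CONTEXT_LIMIT_CHARS:
        # Too large for the assumed local model; send to the hosted one.
        return "cloud"
    if req.max_latency_ms <= LOW_LATENCY_THRESHOLD_MS:
        # Tight latency budget: avoid the network round trip.
        return "local"
    return "cloud"
```

For example, `choose_tier(Request("summarize this", max_latency_ms=100))` returns `"local"`. A real stack would likely add fallback (retry in the cloud when the local model fails) and log each routing decision for observability.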

From Demos to Infrastructure: AI Agents, Edge Models, and Secure Platforms

Published Jan 4, 2026

If you fear AI will push unsafe or costly changes into production, you're not alone. Here's what happened in the two weeks ending 2026-01-04 and what to do about it. Vendors and open projects (GitHub, Replit, Cursor, OpenDevin) moved coding agents from chat into auditable issue→plan→PR workflows with sandboxed test execution and logs, and observability vendors added LLM change telemetry. At the same time, sub-10B multimodal models ran on device (Qualcomm NPUs at ~5–7 W; Core ML/tooling updates; llama.cpp and mlc-llm mobile optimizations), platforms consolidated around model gateways and Backstage plugins, and security practice shifted toward Rust and SBOM defaults. In biotech, closed-loop AI–wet lab pipelines and in vivo editing advances tightened experimental timelines, while quantum work pivoted to logical qubits and error correction. Why it matters: faster iteration, new privacy/latency tradeoffs, and governance and spend risks. Immediate actions: gate agent-authored PRs with tests and code owners, centralize LLM routing and observability, and favor memory-safe build defaults.
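The first action, gating agent-authored PRs with tests and code owners, can be sketched as a small merge policy. This is a hedged illustration, not any platform's actual API: the `PullRequest` type, the `CODE_OWNERS` map, the reviewer names, and `may_merge` are all hypothetical. In practice the same rule would usually be enforced through your host's branch protection and code-owner settings.

```python
from dataclasses import dataclass, field

# Hypothetical ownership map: path prefix -> humans allowed to approve changes there.
CODE_OWNERS = {
    "services/payments/": {"alice"},
    "infra/": {"bob", "carol"},
}


@dataclass
class PullRequest:
    """Hypothetical view of a PR, holding only what the policy needs."""
    author_is_agent: bool
    tests_passed: bool
    changed_paths: list[str]
    approvals: set[str] = field(default_factory=set)  # human reviewers who approved


def owner_groups(paths: list[str]) -> list[set[str]]:
    """Collect the owner set for every owned prefix touched by the change."""
    return [
        owners
        for prefix, owners in CODE_OWNERS.items()
        if any(path.startswith(prefix) for path in paths)
    ]


def may_merge(pr: PullRequest) -> tuple[bool, str]:
    """Decide whether a PR may merge, with a reason for the audit log."""
    if not pr.tests_passed:
        return False, "tests failed"
    if not pr.author_is_agent:
        # Human-authored PRs fall through to whatever policy already exists.
        return True, "human-authored"
    if not pr.approvals:
        return False, "agent PR needs a human approval"
    for owners in owner_groups(pr.changed_paths):
        if not owners & pr.approvals:
            return False, "missing code-owner approval"
    return True, "ok"
```

With this policy, an agent PR touching `infra/` that passes tests but is approved only by `alice` is rejected with `"missing code-owner approval"`, since `alice` owns only `services/payments/`. Returning a reason string alongside the decision keeps each gate outcome loggable, which fits the auditable workflow described above.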