AI Becomes the Engineering Runtime: PDCVR, Agent Stacks, Executable Workspaces

Published Jan 3, 2026

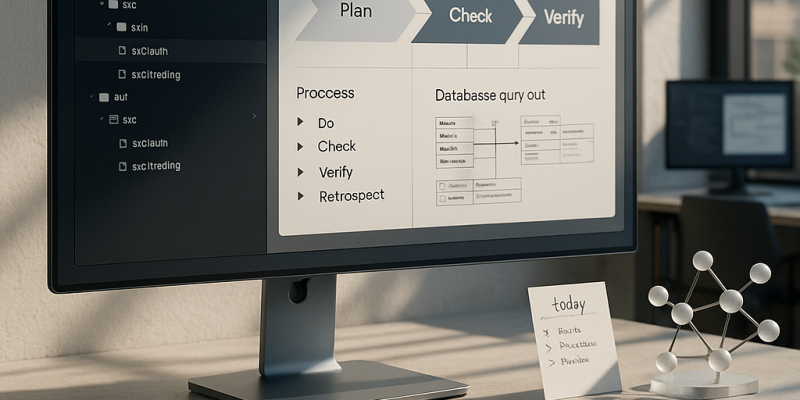

Still losing hours to rework and scope creep? New practitioner threads (Jan 2–3, 2026) show AI shifting from ad‐hoc copilots to an AI‐native operating model, and several of the patterns are ready to act on. A senior engineer published a production‐tested PDCVR loop (Plan–Do–Check–Verify–Retrospect) using Claude Code and GLM‐4.7 and shared prompts and subagent patterns on GitHub; it turns TDD and PDCA ideas into a model‐agnostic SDLC shell that risk teams in fintech, biotech, and critical infrastructure can accept. Teams report that layered agent stacks with folder‐level manifests plus a meta‐agent cut routine 1–2 day tasks from ~8 hours to ~2–3 hours. DevScribe surfaces executable workspaces (databases, diagrams, API testing, offline‐first). Data backfills are being formalized into PDCVR flows. Alignment tax and scope creep are now measurable via agents watching Jira/Linear/RFC diffs. Immediate takeaway: pilot PDCVR, folder priors, agent topology, and an executable cockpit; expect AI to become engineering infrastructure over the next 12–24 months.

AI Is Becoming the New OS for Engineering: Inside PDCVR and Agents

Published Jan 3, 2026

Spending more time untangling coordination than shipping features? In the last 14 days (Reddit/GitHub posts dated 2026‐01‐02 and 2026‐01‐03) engineers converged on concrete patterns you can copy: an AI SDLC wrapper called PDCVR (Plan–Do–Check–Verify–Retrospect) formalizes LLM planning, TDD‐style failing tests, agent‐run verification, and retrospectives; multi‐level agents plus folder‐level manifests and a meta‐agent cut typical 1–2 day tickets from ~8 hours to ~2–3 hours; DevScribe‐like workspaces make docs, DB queries, APIs and tests executable and local‐first (better for regulated stacks); teams are formalizing idempotent backfills and migration runners; and "alignment tax" tooling (agents that track Jira, docs, and Slack) aims to reclaim lost coordination time. Bottom line: this is less about which model wins and more about building an AI‐native operating model you can audit, control, and scale.
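To make the idempotent backfill idea concrete, here is a minimal sketch, not taken from the threads themselves. It assumes a SQLite database and a ledger table named `backfill_ledger`; the function name `run_backfill` and the `apply_batch` callback are hypothetical. Each batch is applied at most once, so a rerun after a crash skips work that already committed.

```python
import sqlite3


def run_backfill(conn, batches, apply_batch):
    """Apply each (batch_id, rows) pair at most once.

    Completed batch ids are recorded in a ledger table (an assumed name),
    so rerunning the same backfill is a no-op for finished batches.
    Returns the number of batches applied in this run.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS backfill_ledger (batch_id TEXT PRIMARY KEY)"
    )
    applied = 0
    for batch_id, rows in batches:
        done = conn.execute(
            "SELECT 1 FROM backfill_ledger WHERE batch_id = ?", (batch_id,)
        ).fetchone()
        if done:
            continue  # applied in an earlier run; skipping keeps reruns safe
        with conn:  # data change and ledger entry commit (or roll back) together
            apply_batch(conn, rows)
            conn.execute(
                "INSERT INTO backfill_ledger (batch_id) VALUES (?)", (batch_id,)
            )
        applied += 1
    return applied
```

Writing the ledger entry in the same transaction as the data change is the design choice that matters here: a batch is never marked done unless its rows were actually written.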

How AI Became Engineering Infrastructure: PDCVR, Agents, Executable Workspaces

Published Jan 3, 2026

Drowning in rework, missed dependencies, and slow releases? Read this and you’ll get the concrete engineering patterns turning AI from a feature into infrastructure. In threads and docs dated 2026‐01‐02 and 2026‐01‐03, teams described a Plan–Do–Check–Verify–Retrospect (PDCVR) loop (on Claude Code and GLM‐4.7) that makes AI code changes auditable; multi‐level agents with folder‐level priors plus a prompt‐rewriting meta‐agent that cut typical 1–2 day tasks from ~8 hours to ~2–3 hours (roughly a 3–4× speedup); DevScribe‐style executable workspaces for code, DBs, and APIs; platformized, idempotent data backfills; tooling to measure the “alignment tax”; and AI todo routers that unify Slack, Jira, and Sentry. If you run critical systems (finance, health, trading), start adopting disciplined loops, folder priors, and observable migration primitives. Mastering these patterns matters as much as picking a model.

How AI Became Your Colleague: The New AI-Native Engineering Playbook

Published Jan 3, 2026

If your teams are losing days to rework, pay attention: over Jan 2–3, 2026 engineers shared concrete practices that make AI a predictable, auditable colleague. You get a compact playbook: PDCVR (Plan–Do–Check–Verify–Retrospect) for Claude Code and GLM‐4.7—plan with RED→GREEN TDD, have the model write failing tests and iterate, run completeness checks, use Claude Code sub‐agents to run builds/tests, and log lessons (GitHub templates published 2026‐01‐03). Paired with folder‐level specs and a prompt‐rewriting meta‐agent, 1–2 day tasks fell from ~8 hours to ~2–3 hours (20‐min prompt + a few 10–15 min loops + ~1 hour testing) (Reddit, 2026‐01‐02). DevScribe‐style executable, offline workspaces, reusable migration/backfill frameworks, alignment‐monitoring agents, and AI “todo routers” complete the stack. Bottom line: adopt PDCVR, agent hierarchies, and executable workspaces to cut cycle time and make AI collaboration auditable—and start by piloting these patterns in safety‐sensitive flows.
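The time breakdown quoted above can be checked with simple arithmetic. The sketch below is illustrative only: the post says "a few" loops, so the range of 3 to 6 loops is an assumption, and the function name `task_minutes` is hypothetical.

```python
def task_minutes(loops, loop_minutes, prompt_minutes=20, testing_minutes=60):
    """Total minutes for one task: initial prompt + review loops + testing."""
    return prompt_minutes + loops * loop_minutes + testing_minutes


# Assumed range for "a few" loops: 3 short loops up to 6 long ones.
low = task_minutes(loops=3, loop_minutes=10)   # 20 + 30 + 60 = 110 minutes
high = task_minutes(loops=6, loop_minutes=15)  # 20 + 90 + 60 = 170 minutes

print(f"{low / 60:.1f} to {high / 60:.1f} hours")
```

Under those assumptions the total lands between about 1.8 and 2.8 hours, which is consistent with the reported ~2–3 hours.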

AI as an Operating System: Building Predictable, Auditable Engineering Workflows

Published Jan 3, 2026

Over the last 14 days practitioners zeroed in on one problem: how to make AI a stable, auditable part of software and data workflows. This note tells you what changed and what to watch. You’ll see a repeatable Plan–Do–Check–Verify–Retrospect (PDCVR) loop for LLM coding (examples using Claude Code and GLM‐4.7), multi‐level agents with folder‐level manifests plus a prompt‐rewriting meta‐agent, and control‐plane tools (DevScribe) that let docs run DB queries and API tests and render diagrams. Practical wins: 1–2 day tickets dropped from ~8 hours to ~2–3 hours in one report (Reddit, 2026‐01‐02). Teams are also building data‐migration platforms, quantifying an “alignment tax,” and using AI todo‐routers to aggregate Slack/Jira/Sentry. Bottom line: models matter less than operating models, agent architectures, and tooling that make AI predictable, auditable, and ready for production.

Inside the AI-Native OS Engineers Use to Ship Software Faster

Published Jan 3, 2026

What if you could cut typical 1–2‐day engineering tasks from ~8 hours to ~2–3 hours while keeping quality and traceability? Over the last two weeks (Reddit posts 2026‐01‐02/03), experienced engineers have converged on practical patterns that together form an AI‐native operating model: the PDCVR loop (Plan–Do–Check–Verify–Retrospect) enforcing test‐first plans and sub‐agents (Claude Code) for verification; folder‐level manifests plus a meta‐agent that rewrites prompts to respect architecture; DevScribe‐style executable workspaces that pair schemas, queries, diagrams and APIs; treating data backfills as idempotent platform workflows; coordination agents that quantify the “alignment tax”; and AI todo routers consolidating Slack/Jira/Sentry into prioritized work. Together these raise throughput, preserve traceability and safety for sensitive domains like fintech/biotech, and shift migrations and scope control from heroic one‐offs to platform responsibilities. Immediate moves: adopt PDCVR, add folder priors, build agent hierarchies, and pilot an executable workspace.

How Teams Industrialize AI: Agentic Workflows, Executable Docs, and Coordination

Published Jan 3, 2026

Tired of wasted engineering hours and coordination chaos? Over the last two weeks (Reddit threads dated 2026‐01‐02 and 2026‐01‐03, plus GitHub and DevScribe docs), engineering communities shifted from debating models to industrializing AI‐assisted development: practical frameworks, agentic workflows, executable docs, and migration patterns. Key moves: a Plan–Do–Check–Verify–Retrospect (PDCVR) process using Claude Code and GLM‐4.7 with prompts and sub‐agents on GitHub; multi‐level agents plus folder priors that cut a typical 1–2 day task from ~8 engineer hours to ~2–3 hours; DevScribe’s offline, executable docs for DBs and APIs; and calls to build reusable data‐migration and coordination‐aware tooling to lower the “alignment tax.” If you lead engineering, treat these patterns as operational playbooks now: adopt PDCVR, folder manifests, executable docs, and attention‐aggregators to secure measurable advantage over the next 12–24 months.

Inside PDCVR: How Agentic AI Boosts Engineering 3–4×

Published Jan 3, 2026

Tired of slow, error‐prone engineering cycles? Read on: posts from Jan 2–3, 2026 show senior engineers are codifying agentic coding into a Plan–Do–Check–Verify–Retrospect (PDCVR) workflow: Plan (repo inspection and explicit TDD), Do (tests first, small diffs), Check (compare plan vs. code), Verify (Claude Code sub‐agents run builds/tests), and Retrospect (capture mistakes to seed the next plan), with prompts and agent configs on GitHub. Teams using multi‐level agents (folder‐level manifests plus a prompt‐rewriting meta‐agent) report 3–4× day‐to‐day gains: typical 1–2 day tasks dropped from ~8 hours to ~2–3 hours. DevScribe appears as an executable, local‐first workspace (DB integration, diagrams, API testing). Data migration, the “alignment tax,” and AI todo aggregators are flagged as platform priorities. Teams that internalize these workflows and tools will define the next phase of AI in engineering.
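The five phases can be pictured as a small control loop. The sketch below is an illustration under stated assumptions, not the published prompts or agent configs: `run_pdcvr`, `CycleLog`, and the `steps` callables are hypothetical names, and each phase is stubbed as a plain function where a real setup would call a model or a sub‐agent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CycleLog:
    lessons: List[str] = field(default_factory=list)  # retrospect output per cycle
    history: List[str] = field(default_factory=list)  # one status line per cycle


def run_pdcvr(task, steps, log, max_cycles=3):
    """Run Plan-Do-Check-Verify-Retrospect until verification passes.

    `steps` maps each phase name to a callable (all hypothetical stand-ins).
    Returns the accepted change, or None if every cycle failed.
    """
    for cycle in range(1, max_cycles + 1):
        plan = steps["plan"](task, log.lessons)  # earlier lessons seed the plan
        change = steps["do"](plan)               # tests first, small diff
        gaps = steps["check"](plan, change)      # plan items missing from the change
        passed = not gaps and steps["verify"](change)  # builds/tests only if complete
        log.lessons.append(steps["retrospect"](plan, change, gaps, passed))
        log.history.append(f"cycle {cycle}: {'pass' if passed else 'retry'}")
        if passed:
            return change
    return None
```

Keeping the log separate from the loop means the lessons and per‐cycle status survive as an audit trail even when a task is abandoned.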

PDCVR and Agentic Workflows Industrialize AI‐Assisted Software Engineering

Published Jan 3, 2026

If your team is losing a day to routine code changes, listen: Reddit posts from 2026‐01‐02/03 show practitioners cutting typical 1–2‐day tasks from ~8 hours to about 2–3 hours by combining a Plan–Do–Check–Verify–Retrospect (PDCVR) loop with multi‐level agents, and this summary tells you what they did and why it matters. PDCVR (reported 2026‐01‐03) runs in Claude Code with GLM‐4.7, forces RED→GREEN TDD in planning, keeps small diffs, uses build‐verification and role subagents (.claude/agents) and records lessons learned. Separate posts (2026‐01‐02) show folder‐level instructions and a prompt‐rewriting meta‐agent turning vague requests into high‐fidelity prompts, giving ~20 minutes to start, 10–15 minutes per PR loop, plus ~1 hour for testing. Tools like DevScribe make docs executable (DB queries, ERDs, API tests). Bottom line: teams are industrializing AI‐assisted engineering; your immediate next step is to instrument reproducible evals—PR time, defect rates, rollbacks—and correlate them with AI use.
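As a starting point for the instrumentation suggested above, the following sketch groups pull‐request records by whether AI assistance was used and compares merge time and rollback rate. The record fields (`ai_assisted`, `hours_to_merge`, `rolled_back`) and the function name `summarize` are assumptions for illustration; real data would come from your own PR and incident tooling.

```python
from statistics import median


def summarize(prs):
    """Summarize PR records per group (AI-assisted vs. not).

    Each record is a dict with assumed keys:
    ai_assisted (bool), hours_to_merge (float), rolled_back (bool).
    """
    summary = {}
    for flag in (True, False):
        group = [p for p in prs if p["ai_assisted"] is flag]
        if not group:
            continue  # no PRs in this group; leave it out of the summary
        summary[flag] = {
            "count": len(group),
            "median_hours": median(p["hours_to_merge"] for p in group),
            "rollback_rate": sum(p["rolled_back"] for p in group) / len(group),
        }
    return summary
```

A side‐by‐side summary like this is descriptive only; it shows correlation with AI use, not that AI use caused the difference.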

LLMs Are Rewriting Software Careers—What Senior Engineers Must Do

Published Dec 6, 2025

Worried AI will quietly eat your engineering org? In the past two weeks (high‐signal Reddit threads around 2025‐12‐06), senior engineers using Claude Opus 4.5, GPT‐5.1 and Gemini 3 Pro say state‐of‐the‐art LLMs already handle complex coding, refactoring, test generation and incident writeups—acting like a tireless junior—forcing a shift from “if” to “how fast.” That matters because mechanical coding is being commoditized while value moves to domain modeling, system architecture, production risk, and team leadership; firms are redesigning senior roles as AI stewards, investing in platform engineering, and rethinking interviews to assess AI orchestration. Immediate actions: treat LLMs as core infrastructure, invest in LLM engineering, domain expertise, distributed systems and AI security, and redraw accountability so senior staff add leverage, not just lines of code.