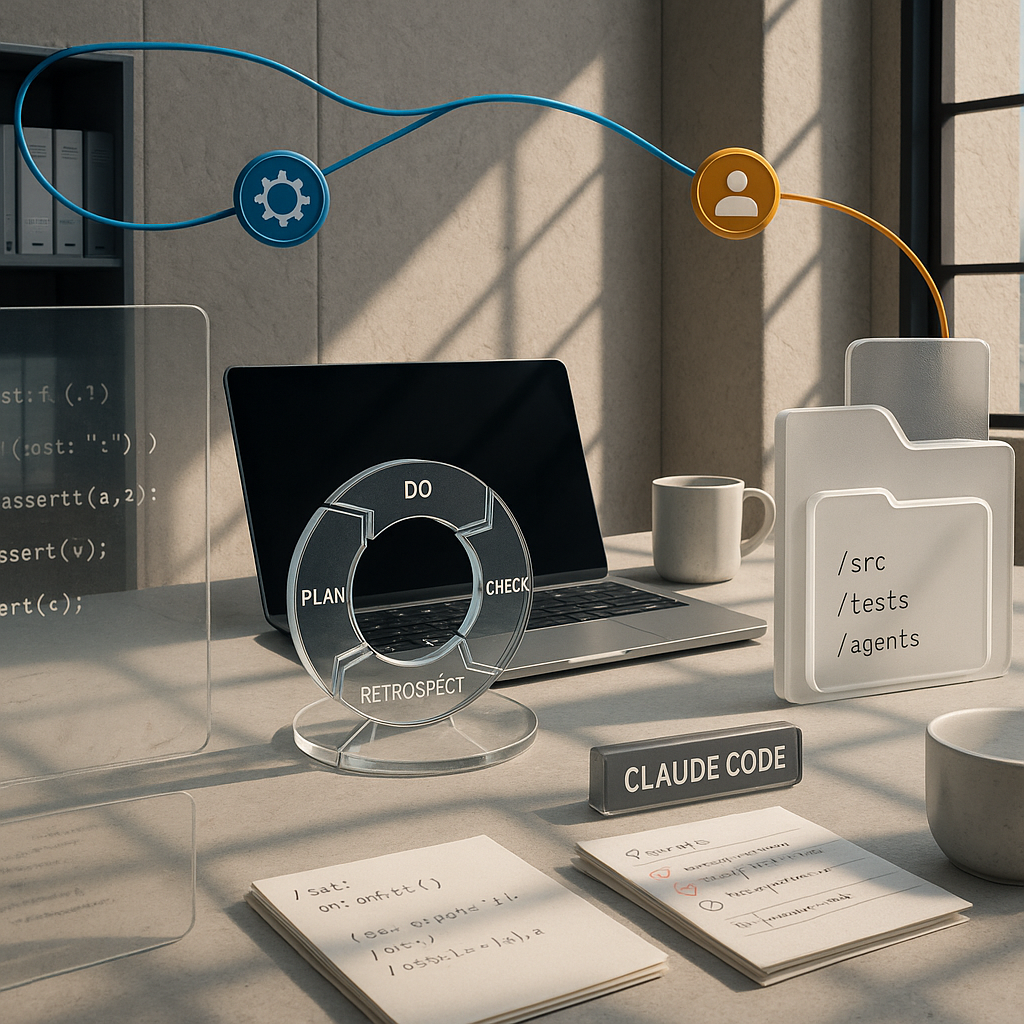

PDCVR and Agentic Workflows Industrialize AI‐Assisted Software Engineering

Published Jan 3, 2026

If your team loses a day to routine code changes, recent practitioner reports are worth a look. Reddit posts from 2026‐01‐02 and 2026‐01‐03 describe cutting typical 1–2‐day tasks from ~8 hours to about 2–3 hours by combining a Plan–Do–Check–Verify–Retrospect (PDCVR) loop with multi‐level agents. This summary covers what they did and why it matters.

The PDCVR workflow (reported 2026‐01‐03) runs in Claude Code with GLM‐4.7. It forces RED→GREEN TDD during planning, keeps diffs small, uses build verification and role subagents (.claude/agents), and records lessons learned.

Separate posts (2026‐01‐02) describe folder‐level instructions and a prompt‐rewriting meta‐agent that turns vague requests into high‐fidelity prompts. The reported time budget is ~20 minutes to start, 10–15 minutes per PR loop, and ~1 hour for testing. Tools like DevScribe make docs executable (DB queries, ERDs, API tests).

Bottom line: teams are industrializing AI‐assisted engineering. Your immediate next step is to instrument reproducible evals (PR time, defect rates, rollbacks) and correlate them with AI use.
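As a starting point for that instrumentation, here is a minimal Python sketch that groups pull requests by whether AI assistance was used and compares the three metrics named above. Everything in it is an assumption for illustration: the `PullRequest` fields, the `summarize` and `compare_by_ai_use` helpers, and the sample records are hypothetical and do not come from the Reddit posts. In practice you would populate the records from your own version control and incident data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class PullRequest:
    # Hypothetical record shape; map these fields from your own tooling.
    pr_id: str
    ai_assisted: bool       # was an AI workflow used on this PR?
    hours_to_merge: float   # PR time, from open to merge
    defects_found: int      # defects later traced back to this PR
    rolled_back: bool       # was the change reverted after release?


def summarize(prs: list[PullRequest]) -> Optional[dict]:
    """Aggregate PR time, defect rate, and rollback rate for one group."""
    if not prs:
        return None  # no data for this group
    return {
        "count": len(prs),
        "mean_hours_to_merge": mean(p.hours_to_merge for p in prs),
        "defects_per_pr": mean(p.defects_found for p in prs),
        "rollback_rate": sum(p.rolled_back for p in prs) / len(prs),
    }


def compare_by_ai_use(prs: list[PullRequest]) -> dict:
    """Split PRs by AI use and summarize each group side by side."""
    return {
        "ai_assisted": summarize([p for p in prs if p.ai_assisted]),
        "baseline": summarize([p for p in prs if not p.ai_assisted]),
    }


if __name__ == "__main__":
    # Made-up sample records, for demonstration only.
    sample = [
        PullRequest("pr-1", True, 2.5, 0, False),
        PullRequest("pr-2", True, 3.0, 1, False),
        PullRequest("pr-3", False, 8.0, 1, True),
        PullRequest("pr-4", False, 7.0, 0, False),
    ]
    for group, stats in compare_by_ai_use(sample).items():
        print(group, stats)
```

A side-by-side comparison like this shows association, not causation. Task size, team, and codebase differ between groups, so treat the output as a prompt for closer review and not as proof of a speedup.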