AI Is Becoming the New OS for Engineering: Inside PDCVR and Agents

Published Jan 3, 2026

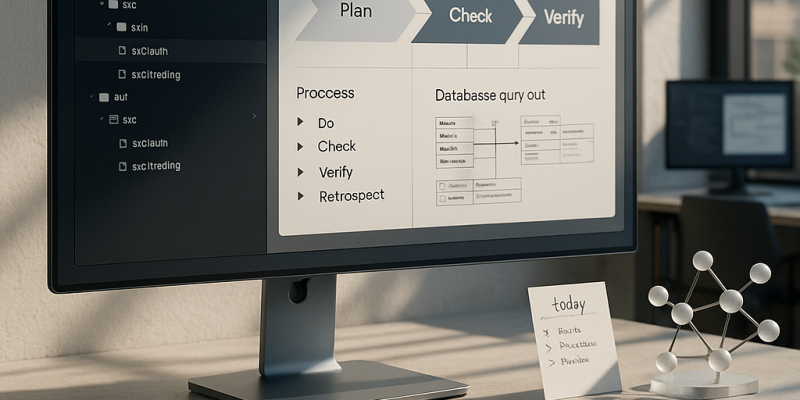

Spending more time untangling coordination than shipping features? Over the last 14 days (Reddit and GitHub posts dated 2026-01-02 and 2026-01-03), engineers converged on concrete patterns you can copy. An AI SDLC wrapper called PDCVR (Plan–Do–Check–Verify–Retrospect) formalizes LLM planning, TDD-style failing tests, agent-based verification, and retrospectives. Multi-level agents, folder-level manifests, and a meta-agent cut typical 1–2 day tickets from ~8 hours to ~2–3 hours. DevScribe-like workspaces make docs, DB queries, APIs, and tests executable and local-first, which suits regulated stacks. Teams are formalizing idempotent backfills and migration runners. And "alignment tax" tooling, meaning agents that track Jira, docs, and Slack, aims to reclaim lost coordination time. Bottom line: this is less about which model wins and more about building an AI-native operating model you can audit, control, and scale.

How AI Became Your Colleague: The New AI-Native Engineering Playbook

Published Jan 3, 2026

If your teams are losing days to rework, pay attention: over Jan 2–3, 2026, engineers shared concrete practices that make AI a predictable, auditable colleague. The result is a compact playbook built on PDCVR (Plan–Do–Check–Verify–Retrospect) for Claude Code and GLM-4.7: plan with RED→GREEN TDD, have the model write failing tests and iterate, run completeness checks, use Claude Code sub-agents to run builds and tests, and log lessons learned (GitHub templates published 2026-01-03). Paired with folder-level specs and a prompt-rewriting meta-agent, 1–2 day tasks fell from ~8 hours to ~2–3 hours: a 20-minute prompt, a few 10–15 minute loops, and ~1 hour of testing (Reddit, 2026-01-02). DevScribe-style executable, offline workspaces, reusable migration/backfill frameworks, alignment-monitoring agents, and AI “todo routers” complete the stack. Bottom line: adopt PDCVR, agent hierarchies, and executable workspaces to cut cycle time and make AI collaboration auditable, and start by piloting these patterns in safety-sensitive flows.
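
As a quick sanity check on the time budget quoted above, the arithmetic can be sketched as follows. The loop counts (2 to 5) are an assumption, since the post only says "a few"; the other figures come from the summary.

```python
# Rough time budget for one AI-assisted task, using the figures quoted above.
# The loop counts are an assumption: the post only says "a few".

PROMPT_MIN = 20        # initial prompt, minutes
TESTING_MIN = 60       # manual testing, minutes
BASELINE_MIN = 8 * 60  # ~8 hours without the workflow


def task_minutes(loops: int, minutes_per_loop: int) -> int:
    """Total minutes: prompt + feedback loops + testing."""
    return PROMPT_MIN + loops * minutes_per_loop + TESTING_MIN


low = task_minutes(loops=2, minutes_per_loop=10)   # optimistic case
high = task_minutes(loops=5, minutes_per_loop=15)  # pessimistic case

print(f"{low / 60:.1f}-{high / 60:.1f} hours")
print(f"speedup: {BASELINE_MIN / high:.1f}x to {BASELINE_MIN / low:.1f}x")
```

Under these assumptions the total lands between roughly 1.7 and 2.6 hours, in the same neighborhood as the reported ~2–3 hours.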

Inside the AI-Native OS Engineers Use to Ship Software Faster

Published Jan 3, 2026

What if you could cut typical 1–2 day engineering tasks from ~8 hours to ~2–3 while keeping quality and traceability? Over the last two weeks (Reddit posts 2026-01-02/03), experienced engineers have converged on practical patterns that together form an AI-native operating model, summarized here: the PDCVR loop (Plan–Do–Check–Verify–Retrospect) enforcing test-first plans and sub-agents (Claude Code) for verification; folder-level manifests plus a meta-agent that rewrites prompts to respect architecture; DevScribe-style executable workspaces that pair schemas, queries, diagrams, and APIs; treating data backfills as idempotent platform workflows; coordination agents that quantify the “alignment tax”; and AI todo routers consolidating Slack/Jira/Sentry into prioritized work. Together these raise throughput, preserve traceability and safety for sensitive domains like fintech and biotech, and shift migrations and scope control from heroic one-offs to platform responsibilities. Immediate moves: adopt PDCVR, add folder priors, build agent hierarchies, and pilot an executable workspace.
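
One way to picture folder-level manifests feeding a prompt-rewriting meta-agent is the minimal sketch below. It is not the posters' implementation: the manifest contents, folder paths, and the `collect_priors` and `rewrite_prompt` helpers are all hypothetical, and a real meta-agent would call an LLM rather than format a string.

```python
from pathlib import PurePosixPath

# Hypothetical folder-level manifests, keyed by directory. In a real repo these
# would be files on disk; the paths and rules here are invented for illustration.
MANIFESTS = {
    ".": "Monorepo: run tests before proposing a diff.",
    "services/payments": "Never call the ledger DB directly; use LedgerClient.",
    "services/payments/api": "Handlers stay thin; validation lives in schemas/.",
}


def collect_priors(target_file: str) -> list[str]:
    """Return manifest text for every ancestor folder, outermost first."""
    priors = [MANIFESTS["."]] if "." in MANIFESTS else []
    parts = PurePosixPath(target_file).parent.parts
    for depth in range(1, len(parts) + 1):
        folder = "/".join(parts[:depth])
        if folder in MANIFESTS:
            priors.append(MANIFESTS[folder])
    return priors


def rewrite_prompt(request: str, target_file: str) -> str:
    """Stand-in for the meta-agent: prepend folder rules to a vague request."""
    rules = "\n".join(f"- {rule}" for rule in collect_priors(target_file))
    return f"Constraints:\n{rules}\n\nTask ({target_file}): {request}"


prompt = rewrite_prompt("add refund endpoint", "services/payments/api/refunds.py")
print(prompt)
```

The point of the design is that architectural rules live next to the code they govern, so the rewritten prompt picks them up without the engineer restating them each time.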

Inside the AI Operating Fabric Transforming Engineering: PDCVR, Agents, Workspaces

Published Jan 3, 2026

Losing time to scope creep and brittle AI output? In the past two weeks engineers documented concrete practices showing AI is becoming the operating fabric of engineering work: PDCVR (Plan–Do–Check–Verify–Retrospect) — documented 2026‐01‐03 for Claude Code and GLM‐4.7 with GitHub prompt templates — gives an AI‐native SDLC wrapper; multi‐agent hierarchies (folder‐level instructions plus a prompt‐rewriting meta‐agent) cut typical 1–2 day monorepo tasks from ~8 hours to ~2–3 hours (reported 2026‐01‐02); DevScribe (2026‐01‐03) offers executable docs (DB queries, diagrams, REST client, offline‐first); engineers pushed reusable data backfill/migration patterns (2026‐01‐02); posts flagged an “alignment tax” on throughput (2026‐01‐02/03); and founders prototyped AI todo routers aggregating Slack/Jira/Sentry (2026‐01‐02). Immediate takeaway: implement PDCVR‐style loops, agent hierarchies, executable workspaces and alignment‐aware infra — and measure impact.

AI as Engineer: From Autocomplete to Process-Aware Collaborator

Published Jan 3, 2026

Your team’s code is fast but fragile — in the last two weeks engineers, not vendors, published practical patterns to make LLMs safe and productive. On 2026‐01‐03 a senior engineer released PDCVR (Plan‐Do‐Check‐Verify‐Retrospect) using Claude Code and GLM‐4.7 with prompts and sub‐agents on GitHub; it embeds planning, TDD, build verification, and retrospectives as an AI‐native SDLC layer for risk‐sensitive systems. On 2026‐01‐02 others showed folder‐level repo manifests plus a prompt‐rewriting meta‐agent that cut routine 1–2‐day tasks from ~8 hours to ~2–3 hours. Tooling shifted too: DevScribe (site checked 2026‐01‐03) offers executable, offline docs with DBs, diagrams, and API testing. Engineers also pushed reusable data‐migration patterns, highlighted the “alignment tax,” and prototyped Slack/Jira/Sentry aggregators. Bottom line: treat AI as a process participant — build frameworks, guardrails, and observability now.

AI Is Becoming the Operating System for Software Teams

Published Jan 3, 2026

Drowning in misaligned work and slow delivery? In the last two weeks senior engineers sketched what is changing and why it matters: AI is becoming an operating system for software teams. Teams are shifting from ad-hoc prompting to repeatable, auditable frameworks like Plan–Do–Check–Verify–Retrospect (PDCVR), implemented on Claude Code + GLM-4.7 with prompts and sub-agents open-sourced (Reddit, 2026-01-03), and cutting error loops with TDD and build-verification agents. Hierarchical agents plus folder manifests trim a typical 1–2 day task from ~8 hours to ~2–3 hours (20-minute prompt, 2–3 feedback loops, ~1 hour testing). Tools like DevScribe collapse docs, queries, diagrams, and API tests into executable workspaces. Data backfills need platform controllers with checkpointing and rollforward/rollback. The biggest ops win: alignment-aware dashboards and AI todo aggregators that expose scope creep and speed decisions. Immediate takeaway: harden workflows, add agent tiers, and invest in alignment tooling now.
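
The checkpointed, idempotent backfill mentioned above can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the in-memory `checkpoint` and `target` stand in for durable storage, the uppercase transform is a placeholder, and rollback is not covered.

```python
# In-memory stand-ins; a real controller would persist both durably.
checkpoint = {"last_id": 0}   # highest row id fully processed
target: dict[int, str] = {}   # destination table, keyed by row id


def backfill(rows: dict[int, str], batch_size: int = 2) -> int:
    """Copy rows with id > checkpoint into target; return rows written."""
    pending = sorted(i for i in rows if i > checkpoint["last_id"])
    written = 0
    for start in range(0, len(pending), batch_size):
        batch = pending[start:start + batch_size]
        for row_id in batch:
            # Upsert by key: replaying a batch overwrites, never duplicates.
            target[row_id] = rows[row_id].upper()
            written += 1
        # Advance the checkpoint only after the whole batch succeeded.
        checkpoint["last_id"] = batch[-1]
    return written


source = {1: "a", 2: "b", 3: "c", 4: "d", 5: "e"}
first = backfill(source)    # processes all five rows
second = backfill(source)   # nothing left past the checkpoint
```

Because writes are keyed upserts and the checkpoint moves only after a batch completes, a crash mid-batch means that batch is replayed on restart without producing duplicates.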

Inside PDCVR: How Agentic AI Boosts Engineering 3–4×

Published Jan 3, 2026

Tired of slow, error-prone engineering cycles? Posts from Jan 2–3, 2026 show senior engineers codifying agentic coding into a Plan–Do–Check–Verify–Retrospect (PDCVR) workflow: Plan (repo inspection and explicit TDD), Do (tests first, small diffs), Check (compare plan vs. code), Verify (Claude Code sub-agents run builds and tests), and Retrospect (capture mistakes to seed the next plan), with prompts and agent configs on GitHub. Engineers using multi-level agents (folder-level manifests plus a prompt-rewriting meta-agent) report 3–4× day-to-day gains: typical 1–2 day tasks dropped from ~8 hours to ~2–3 hours. DevScribe appears as an executable, local-first workspace (DB integration, diagrams, API testing). Data migration, the “alignment tax,” and AI todo aggregators are flagged as platform priorities. Teams that internalize these workflows and tools will define the next phase of AI in engineering.
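
The five stages described above can be sketched as a simple control loop. This is a toy illustration under stated assumptions, not the published prompts or agent configs: the `Cycle` class, `run_pdcvr`, and the fake verifier are hypothetical names, and each stage is reduced to a log entry.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Cycle:
    task: str
    lessons: list[str] = field(default_factory=list)  # carried between rounds
    log: list[str] = field(default_factory=list)      # audit trail of stages


def run_pdcvr(cycle: Cycle, verify: Callable[[str], bool], max_rounds: int = 3) -> bool:
    """Run Plan-Do-Check-Verify-Retrospect until verify passes or rounds run out."""
    for round_no in range(1, max_rounds + 1):
        plan = f"plan for {cycle.task!r} using {len(cycle.lessons)} lesson(s)"
        cycle.log.append(f"round {round_no}: PLAN {plan}")
        cycle.log.append(f"round {round_no}: DO write failing test, then small diff")
        cycle.log.append(f"round {round_no}: CHECK compare diff against plan")
        passed = verify(cycle.task)  # e.g. a sub-agent running build and tests
        cycle.log.append(f"round {round_no}: VERIFY {'pass' if passed else 'fail'}")
        if passed:
            cycle.log.append(f"round {round_no}: RETROSPECT record what worked")
            return True
        # Retrospect on failure: the lesson seeds the next round's plan.
        cycle.lessons.append(f"round {round_no} failed verification")
    return False


# Fake verifier that fails once, then passes, to exercise the loop.
attempts = {"n": 0}


def flaky_verify(task: str) -> bool:
    attempts["n"] += 1
    return attempts["n"] >= 2


cycle = Cycle(task="add rate limiter")
ok = run_pdcvr(cycle, flaky_verify)
```

Keeping the stage log and the lessons on one object is what makes the loop auditable: every round leaves a record of what was planned, checked, and verified.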

PDCVR and Agentic Workflows Industrialize AI‐Assisted Software Engineering

Published Jan 3, 2026

If your team is losing a day to routine code changes, listen: Reddit posts from 2026-01-02/03 show practitioners cutting typical 1–2 day tasks from ~8 hours to about 2–3 hours by combining a Plan–Do–Check–Verify–Retrospect (PDCVR) loop with multi-level agents. This summary covers what they did and why it matters. PDCVR (reported 2026-01-03) runs in Claude Code with GLM-4.7, forces RED→GREEN TDD in planning, keeps diffs small, uses build-verification and role sub-agents (.claude/agents), and records lessons learned. Separate posts (2026-01-02) show folder-level instructions and a prompt-rewriting meta-agent turning vague requests into high-fidelity prompts, with a time budget of ~20 minutes for the initial prompt, 10–15 minutes per PR loop, and ~1 hour for testing. Tools like DevScribe make docs executable (DB queries, ERDs, API tests). Bottom line: teams are industrializing AI-assisted engineering; your immediate next step is to instrument reproducible evals (PR time, defect rates, rollbacks) and correlate them with AI use.
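
A first pass at the instrumentation suggested above could be as simple as comparing cohorts. The sketch below uses invented PR records and hypothetical field names; it shows a descriptive comparison only and says nothing about causation.

```python
from statistics import median

# Hypothetical PR records; field names and values are invented for illustration.
prs = [
    {"hours_to_merge": 7.5, "ai_assisted": False, "rolled_back": False},
    {"hours_to_merge": 9.0, "ai_assisted": False, "rolled_back": True},
    {"hours_to_merge": 2.5, "ai_assisted": True, "rolled_back": False},
    {"hours_to_merge": 3.0, "ai_assisted": True, "rolled_back": False},
    {"hours_to_merge": 2.0, "ai_assisted": True, "rolled_back": True},
]


def summarize(records: list[dict], ai_assisted: bool) -> dict:
    """Median merge time and rollback rate for one cohort (assumed non-empty)."""
    cohort = [r for r in records if r["ai_assisted"] == ai_assisted]
    return {
        "count": len(cohort),
        "median_hours": median(r["hours_to_merge"] for r in cohort),
        "rollback_rate": sum(r["rolled_back"] for r in cohort) / len(cohort),
    }


for flag in (False, True):
    print("ai_assisted" if flag else "baseline", summarize(prs, flag))
```

Tracking rollbacks alongside merge time matters here: a cohort that merges faster but reverts more often has not actually improved.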

From Benchmarks to Real Markets: AI's Rise of Multi‐Agent Testbeds

Published Dec 6, 2025

Worried that today’s benchmarks miss real‐world AI risks? Over the last 14 days researchers and platforms have shifted from single‐model IQ tests to rich, multi‐agent, multi‐tool testbeds that mimic markets, dev teams, labs, and ops centers — and this note tells you why that matters and what to do. These environments let multiple heterogeneous agents use tools (shells, APIs, simulators), face partial observability, and create feedback loops, exposing coordination failures, collusion, flash crashes, or brittle workflows. That matters for your revenue, risk, and operations: traders can stress‐test strategies against AI order flow, engineers can evaluate maintainability at scale, and CISOs can run red/blue exercises with audit trails. Immediate actions: learn to design and instrument these testbeds, define clear agent roles, enforce policy layers and human review, and use them as wind‐tunnels before agents touch real money, patients, or infrastructure.

Agentic AI Is Going Pro: Semi‐Autonomous Teams That Ship Code

Published Dec 6, 2025

Burnout from rote engineering tasks is real, and agentic AI is now positioned to change that. Here's what happened and why you should care: over the last two weeks (and increasingly since early 2025), agent frameworks and AI-native workflows have matured so models can plan, act through tools, and coordinate, producing multi-step outcomes (PRs, reports, backtests) rather than single snippets. Teams are using planner, executor, and critic agents for multi-file refactors, incident triage, experiment orchestration, and trading research. That matters because it can compress delivery cycles, raise research throughput, and cut time-to-insight, provided you govern it. Immediate implications: define autonomy zones (green/yellow/red), sandbox execution for trading, enforce tool catalogs and observability/audit logs, and prioritize people who can design and supervise these systems. Organizations that do this will gain the edge.