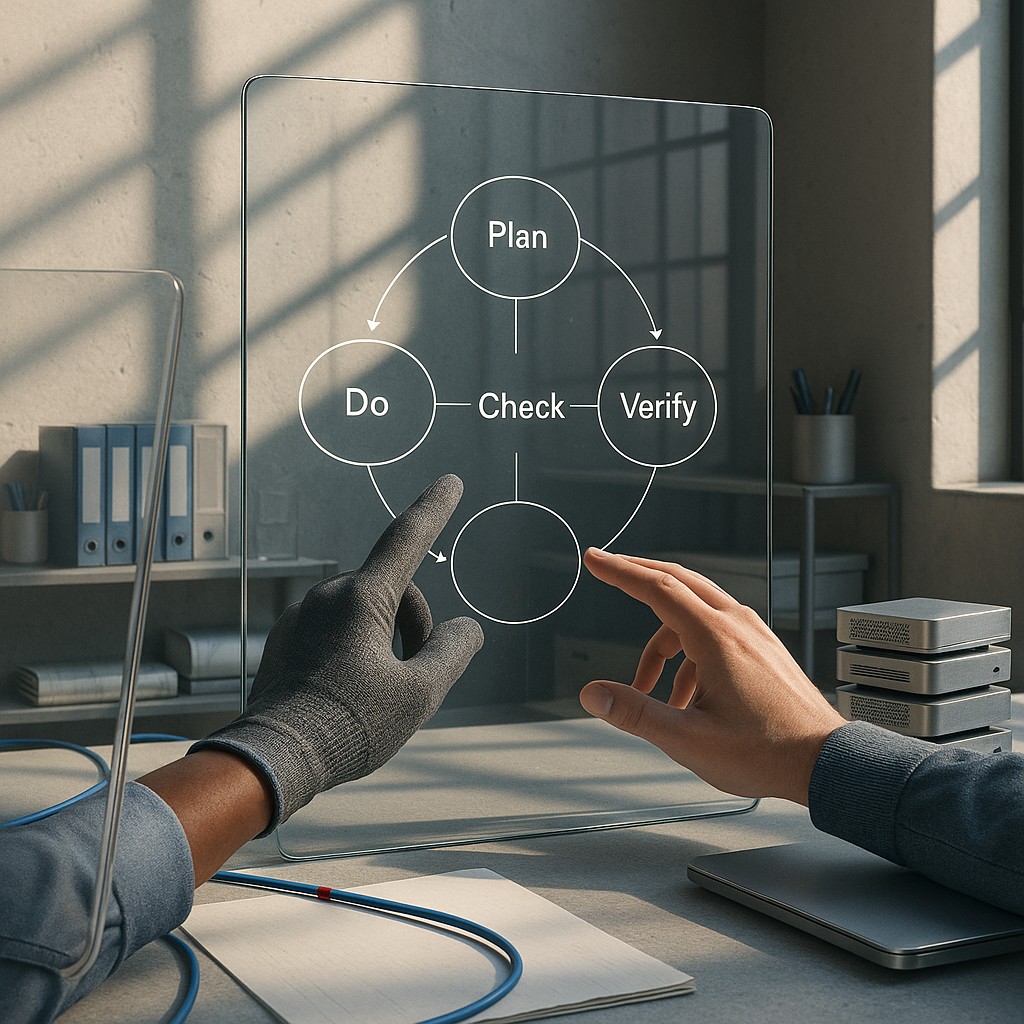

Depending on where you sit, this fortnight reads either as proof that AI has crossed into “process-aware collaborator” territory or as evidence that the gains come mostly from scaffolding, not smarts. Supporters point to the PDCVR loop (Plan, Do, Check, Verify, Retrospect), rooted in PDCA and TDD research and combined with Claude Code sub-agents and build verification, as a defensible AI-native SDLC for risk-sensitive work. They cite structured agent setups, with folder-level instructions and a prompt-rewriting assistant, that cut common tasks from ~8 hours to 2–3, and DevScribe’s offline, executable documentation, which keeps databases, ERDs, and API tests in the same local workspace. Skeptics note that the same reports concede “AI slop” still exists [Reddit, 2026-01-02]; to “lower the chance” of hallucinated architecture is not to eliminate it, and many delays live in the Alignment Tax (scope churn, missing stakeholders, brittle dependencies) rather than in code. Early prototypes, such as an AI todo that aggregates Slack/Jira/Sentry, show promise but also show how nascent the coordination layer is. What if the breakthrough isn’t intelligence at all, but scaffolding?

Here’s the twist the evidence supports: constraint, not creativity, is the unlock. The biggest step-change arrives when models are boxed in (via TDD-first plans, folder-level invariants, meta-prompts that codify context, and local workspaces where docs can run queries and API assertions) so that “fast but fallible teammates” operate inside well-understood boundaries. The near-term shift won’t be another monolithic agent. It will be specialized, composable services, data-migration-as-a-service with idempotence and dashboards, and agents that watch Jira/Linear and Slack for scope drift before it becomes rework. Platform and data engineers, SREs, and EMs will feel it first; CTOs and CISOs in trading, health, and other high-risk domains should watch for measurable reductions in backfill errors and alignment tax as these loops harden. The edge moves from model choice to how well you choreograph constraints: progress that looks less like a chatbot and more like a checklist that executes itself.
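To make "idempotence" in a migration service concrete, here is a minimal sketch of one way a backfill step could be made safe to re-run. It is an illustration under stated assumptions, not a description of any tool mentioned above: the table names (`applied_batches`, `accounts`), the function names, and the batch-ID scheme are all hypothetical, and SQLite stands in for whatever store a real service would use.

```python
import sqlite3


def make_db():
    """Create an in-memory database with a ledger table and a target table.

    Both table names are hypothetical, chosen only for this sketch.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE applied_batches (batch_id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    return conn


def apply_batch(conn, batch_id, rows):
    """Apply one backfill batch at most once.

    Returns True if the batch was applied, False if it had already been
    applied and this call was a no-op.
    """
    # One transaction: the ledger entry and the row writes commit together,
    # or roll back together if anything raises.
    with conn:
        cur = conn.execute(
            "INSERT OR IGNORE INTO applied_batches (batch_id) VALUES (?)",
            (batch_id,),
        )
        if cur.rowcount == 0:
            return False  # ledger already has this batch; skip the writes
        conn.executemany(
            "INSERT OR REPLACE INTO accounts (id, balance) VALUES (?, ?)",
            rows,
        )
    return True


if __name__ == "__main__":
    db = make_db()
    batch = [(1, 100), (2, 250)]
    print(apply_batch(db, "b1", batch))  # first run applies the batch
    print(apply_batch(db, "b1", batch))  # retry is detected and skipped
```

The design choice worth noting is the ledger: because a retry is detected before any row is touched, an agent (or a human) can re-run a failed job without double-applying it, which is the kind of boundary that lets a fallible teammate operate safely.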