AI-Native SDLC: PDCVR, Agentic Workflows, and Executable Workspaces

Published Jan 3, 2026

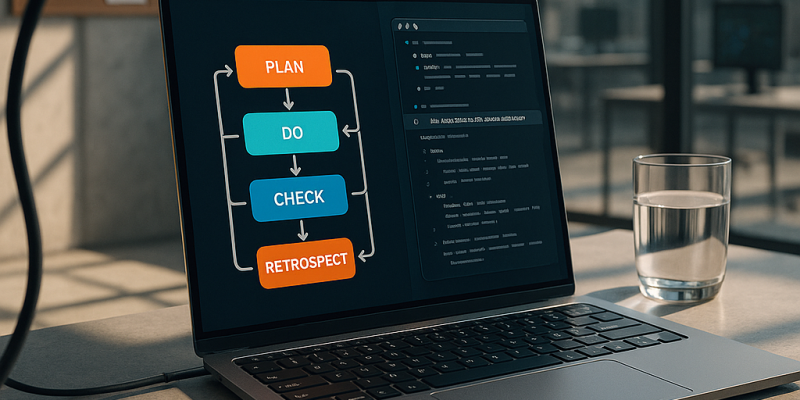

Tired of AI “autocomplete” causing more rework? Reddit threads from 2026‐01‐02–03 show senior engineers wrapping LLMs into repeatable processes—here’s what matters for your org. They describe a Plan–Do–Check–Verify–Retrospect (PDCVR) loop (Claude Code + GLM‐4.7) that enforces TDD stages, separate build/verification agents, and prompt‐template retrospectives for auditability—recommended for fintech, biotech, and safety‐sensitive teams. Others report folder‐level manifests plus a prompt‐rewriting meta‐agent cutting 1–2‐day tasks from ~8 hours to ~2–3 hours (3–4× speedup). Tool trends: DevScribe’s “executable docs,” rising need for robust data‐migration/backfill frameworks, and coordination‐aware agent tooling to reduce weeks‐long alignment tax. Engineers now demand reproducible evals, exact prompts, and task‐level metrics; publish prompt libraries and benchmarks, and build verification and migration frameworks as immediate next steps.

PDCVR and Agentic Workflows Industrialize AI‐Assisted Software Engineering

Published Jan 3, 2026

If your team is losing a day to routine code changes, listen: Reddit posts from 2026‐01‐02/03 show practitioners cutting typical 1–2‐day tasks from ~8 hours to about 2–3 hours by combining a Plan–Do–Check–Verify–Retrospect (PDCVR) loop with multi‐level agents, and this summary tells you what they did and why it matters. PDCVR (reported 2026‐01‐03) runs in Claude Code with GLM‐4.7, forces RED→GREEN TDD in planning, keeps small diffs, uses build‐verification and role subagents (.claude/agents) and records lessons learned. Separate posts (2026‐01‐02) show folder‐level instructions and a prompt‐rewriting meta‐agent turning vague requests into high‐fidelity prompts, giving ~20 minutes to start, 10–15 minutes per PR loop, plus ~1 hour for testing. Tools like DevScribe make docs executable (DB queries, ERDs, API tests). Bottom line: teams are industrializing AI‐assisted engineering; your immediate next step is to instrument reproducible evals—PR time, defect rates, rollbacks—and correlate them with AI use.

Why Persistent Agentic AI Will Transform Production — and What Could Go Wrong

Published Dec 30, 2025

In the last two weeks agentic AI crossed a threshold: agents moved from chat windows into persistent work on real production surfaces—codebases, data infra, trading research loops and ops pipelines—and that matters because it changes how your teams create value and risk. You’ll get: what happened, why now, concrete patterns, and immediate design rules. Three enablers converged in the past 14 days—tool‐calling + long context, mature agent frameworks, and pressure to show 2–3× gains—so teams are running agents that watch repos, open PRs, run backtests, monitor P&L, and triage data quality. Key risks: scope drift, hidden coupling, and security/data exposure. What to do now: give each agent a narrow mandate, least‐privilege tools, human‐in‐the‐loop gates, SLOs, audit logs and metrics that measure PR acceptance, cycle time, and incidents—treat agents as owned services, not autonomous teammates.

From Chatbots to Core: LLMs Become Dev Infrastructure

Published Dec 6, 2025

If your teams are still copy‐pasting chatbot output into editors, you’re living the “vibe coding” pain—massive, hard‐to‐audit diffs and hidden logic changes have pushed many orgs to rethink workflows. Here’s what happened in the last two weeks and what it means for you: engineers are treating LLMs as first‐class infrastructure—repo‐aware agents that index code, tests, configs and open contextual PRs; AI running in CI to review code, generate tests, and gate large PRs; and AI copilots parsing logs and drafting postmortems. That shift boosts productivity but raises real risk in fintech, trading, biotech (e.g., pandas→polars rewrites, pre‐trade check drift). Immediate responses: zone repos (green/yellow/red), log every AI action, and enforce policy engines (on‐prem/VPC for sensitive code). Watch for platform announcements and practitioner case studies to track adoption.

From Giant LLMs to Micro‐AI Fleets: The Distillation Revolution

Published Dec 6, 2025

Paying multi‐million‐dollar annual run‐rates to call giant models? Over the last 14 days the field has accelerated toward systematically distilling big models into compact specialists you can run cheaply on commodity hardware or on‐device, and this summary shows what’s changed and what to do. Recent preprints (2025‐10 to 2025‐12) and reproductions show 1–7B‐parameter students matching teachers on narrow domains while using 4–10× less memory and often 2–5× faster with under 5–10% loss; FinOps reports (through 2025‐11) flag multi‐million‐dollar inference costs; OEM benchmarks show sub‐3B models can hit interactive latency on devices with tens–low‐hundreds TOPS NPUs. Why it matters: lower cost, better latency, and privacy transform trading, biotech, and dev tools. Immediate moves: define task constraints (latency <50–100 ms, memory <1–2 GB), build distillation pipelines, centralize registries, and enforce monitoring/MBOMs.

Multimodal AI Is Becoming the Universal Interface for Complex Workflows

Published Dec 6, 2025

If you’re tired of stitching OCR, ASR, vision models, and LLMs together, pay attention: in the last 14 days major providers pushed multimodal APIs and products into broad preview or GA, turning “nice demos” into a default interface layer. You’ll get models that accept text, images, diagrams, code, audio, and video in one call and return text, structured outputs (JSON/function calls), or tool actions — cutting brittle pipelines for engineers, quants, fintech teams, biotech labs, and creatives. Key wins: cross‐modal grounding, mixed‐format workflows, structured tool calling, and temporal video reasoning. Key risks: harder evaluation, more convincing hallucinations, and PII/compliance challenges that may force on‐device or on‐prem inference. Watch for multimodal‐default SDKs, agent frameworks with screenshot/PDF/video support, and domain benchmarks; immediate moves are to think multimodally, redesign interfaces, and add validation/safety layers.

Why Small, On‐Device "Distilled" AI Will Replace Cloud Giants

Published Dec 6, 2025

Cloud inference bills and GPU scarcity are squeezing margins — want a cheaper, faster alternative? Over the past two weeks research releases, open‐source projects, and hardware roadmaps have pushed the industrialization of distilled, on‐device and domain‐specific AI. Large teachers (100B+ params) are being compressed into student models (often 1–3B) via int8/int4/binary quantization and pruning to meet targets like <50 ms latency and <1 GB RAM, running on NPUs and compact accelerators (tens of TOPS). That matters for fintech, trading, biotech, devices, and developer tooling: lower latency, better privacy, easier regulatory proofs, and offline operation. Immediate actions: build distillation + evaluation pipelines, adopt model catalogs and governance, and treat model SBOMs as security hygiene. Watch for risks: harder benchmarking, fragmentation, and supply‐chain tampering. Mastering this will be a 2–3 year competitive edge.

Programmable Sound: AI Foundation Models Are Rewriting Music and Game Audio

Published Dec 6, 2025

Tired of wrestling with flat, uneditable audio tracks? Over the last 14 days major labs and open‐source communities converged on foundation audio models that treat music, sound and full mixes as editable, programmable objects—backed by code, prompts and real‐time control—here’s what that means for you. These scene‐level, stem‐aware models can separate/generate stems, respect structure (intro/verse/chorus), follow MIDI/chord constraints, and edit parts non‐destructively. That shift lets artists iterate sketches and swap drum textures without breaking harmonies, enables adaptive game and UX soundtracks, and opens audio agents for live scoring or auto‐mixing. Risks: style homogenization, data provenance and legal ambiguity, and latency/compute tradeoffs. Near term (12–24 months) action: treat models as idea multipliers, invest in unique sound data, prioritize controllability/low‐latency integrations, and add watermarking/provenance for safety.

LLMs Are Rewriting Software Careers—What Senior Engineers Must Do

Published Dec 6, 2025

Worried AI will quietly eat your engineering org? In the past two weeks (high‐signal Reddit threads around 2025‐12‐06), senior engineers using Claude Opus 4.5, GPT‐5.1 and Gemini 3 Pro say state‐of‐the‐art LLMs already handle complex coding, refactoring, test generation and incident writeups—acting like a tireless junior—forcing a shift from “if” to “how fast.” That matters because mechanical coding is being commoditized while value moves to domain modeling, system architecture, production risk, and team leadership; firms are redesigning senior roles as AI stewards, investing in platform engineering, and rethinking interviews to assess AI orchestration. Immediate actions: treat LLMs as core infrastructure, invest in LLM engineering, domain expertise, distributed systems and AI security, and redraw accountability so senior staff add leverage, not just lines of code.

AI-Native Trading: Models, Simulators, and Agentic Execution Take Over

Published Dec 6, 2025

Worried you’ll be outpaced by AI-native trading stacks? Read this and you’ll know what changed and what to do. In the past two weeks industry moves and research have fused large generative models, high‐performance market simulation, and low‐latency execution: NVIDIA says over 50% of new H100/H200 cluster deals in financial services list trading and generative AI as primary workloads (NVIDIA, 2025‐11), and cloud providers updated GPU stacks in 2025‐11–2025‐12. New tools can generate tens of thousands of synthetic years of limit‐order‐book data on one GPU, train RL agents against co‐evolving adversaries, and oversample crisis scenarios—shifting training from historical backtests to simulated multiverses. That raises real risks (opaque RL policies, strategy monoculture from LLM‐assisted coding, data leakage). Immediate actions: inventory generative dependencies, segregate research vs production models, enforce access controls, use sandboxed shadow mode, and monitor GPU usage, simulator open‐sourcing, and AI‐linked market anomalies over the next 6–12 months.