AI Moves Into Production: Agents, Multimodal Tools, and Regulated Workflows

Published Jan 4, 2026

Struggling to balance speed, cost and risk in production AI? Between Dec 20, 2024 and Jan 2, 2025, vendors pushed hard on deployable, controllable AI and domain integrations—OpenAI’s o3 (Dec 20) made “thinking time” a tunable control for deep reasoning; IDEs and CI tools (GitHub, JetBrains, Continue.dev, Cursor) shipped multimodal, multi-file coding assistants; quantum vendors framed progress around logical qubits; biotech groups moved molecule design into reproducible pipelines; imaging AI saw regulatory deployments; finance focused AI on surveillance and research co-pilots; and security stacks pushed memory-safe languages and SBOMs. Why it matters: you’ll face new cost models (per-second + per-token), SLO and safety decisions, governance needs, interoperability and audit requirements, and shifts from model work to pipeline and data engineering. Immediate actions: set deliberation policies, treat assistants as production services with observability and access controls, and track standardization/benchmarks (TDC, regulatory evidence).
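
To make the "per-second + per-token" cost model concrete, here is a minimal sketch of how a deliberation policy could cap thinking time against a per-request budget. The `Pricing` fields, the rates, and the link between per-second billing and thinking time are assumptions for illustration, not any vendor's published pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical rates; real vendor pricing will differ."""
    usd_per_second: float      # assumed charge for deliberation ("thinking") time
    usd_per_1k_tokens: float   # assumed charge per 1,000 tokens processed


def estimate_cost(pricing: Pricing, thinking_seconds: float, tokens: int) -> float:
    """Combine a time-based and a token-based charge into one request cost."""
    time_cost = pricing.usd_per_second * thinking_seconds
    token_cost = pricing.usd_per_1k_tokens * tokens / 1000
    return time_cost + token_cost


def max_thinking_seconds(pricing: Pricing, budget_usd: float, tokens: int) -> float:
    """Deliberation policy: longest thinking time that fits a per-request budget."""
    if pricing.usd_per_second <= 0:
        raise ValueError("usd_per_second must be positive")
    remaining = budget_usd - pricing.usd_per_1k_tokens * tokens / 1000
    # If tokens alone exceed the budget, no thinking time is affordable.
    return max(0.0, remaining / pricing.usd_per_second)
```

A policy like this could set different budgets per workload, for example a small one for interactive requests and a larger one for batch analysis.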

Forget New Models — The Real AI Race Is Infrastructure

Published Jan 4, 2026

If your teams still treat AI as experiments, two weeks of industry moves in late December 2024 show that is no longer enough. Vendors shifted from line‐level autocomplete to agentic, multi‐file coding pilots (Sourcegraph 12‐23; Continue.dev 12‐27; GitHub Copilot Workspace private preview announced 12‐20). Qualcomm, Meta, and Apple (through patent filings) each signaled on‐device LLM roadmaps (12‐22–12‐26), and quantum, biotech, healthcare, fintech, and platform teams all emphasized production metrics and infrastructure over novel models. What you get: a clear signal that the frontier is operationalization, meaning platformized LLM gateways, observability, governance, on‐device/cloud tradeoffs, logical‐qubit KPIs, and integrated drug‐discovery and clinical imaging pipelines (NHS: 100+ hospitals, 12‐23). Immediate next steps: treat AI as a shared service with controls and telemetry, pilot agentic workflows with human‐in‐the‐loop safety, and align architectures to on‐device constraints and regulatory paths.

Laptops and Phones Can Now Run Multimodal AI — Here's Why

Published Jan 4, 2026

Worried about latency, privacy, or un‐auditable AI in your products? In the last two weeks vendors shifted multimodal and compiler work from “cloud‐only” to truly on‐device: Apple’s MLX added optimized kernels and commits (2024‐12‐28 to 2025‐01‐03) and independent llama.cpp benchmarks (2024‐12‐30) show a 7B model at ~20–30 tokens/s on M1/M2 at 4‐bit; Qualcomm’s Snapdragon 8 Gen 4 cites up to 45 TOPS (2024‐12‐17) and MediaTek’s Dimensity 9400 >60 TOPS (2024‐11‐18). At the same time GitHub (docs 2024‐12‐19; blog 2025‐01‐02) and JetBrains (2024‐12‐17, 2025‐01‐02) push plan–execute–verify agents with audit trails, while LangSmith (2024‐12‐22) and Arize Phoenix (commits through 2024‐12‐27) make LLM traces and evals first‐class. Practical takeaway: target hybrid architectures—local summarization/intent on-device, cloud for heavy retrieval—and bake in tests, traces, and governance now.
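
The hybrid split described above can be sketched as a small router. This is an illustrative sketch only: the task names, the `Request` type, and the latency threshold are assumptions, and the throughput figure passed in would come from your own measurements rather than the benchmarks cited.

```python
from dataclasses import dataclass

# Tasks the summary suggests keeping on-device; the exact labels are hypothetical.
LOCAL_TASKS = {"summarize", "intent"}


@dataclass(frozen=True)
class Request:
    task: str            # e.g. "summarize", "intent", "retrieve"
    output_tokens: int   # expected length of the generated answer


def estimated_decode_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Rough decode time: tokens to generate divided by measured throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


def route(req: Request, local_tokens_per_second: float, max_local_seconds: float) -> str:
    """Return "device" for light local tasks that fit the latency budget, else "cloud"."""
    if req.task not in LOCAL_TASKS:
        return "cloud"
    if estimated_decode_seconds(req.output_tokens, local_tokens_per_second) > max_local_seconds:
        return "cloud"
    return "device"
```

At 20 tokens/s, the low end of the range quoted for a 4‐bit 7B model, a 200‐token answer takes about 10 seconds to decode, which is why a latency budget belongs in the routing decision.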

From Copilots to Pipelines: AI Enters Professional Infrastructure

Published Jan 4, 2026

Tired of copilots that only autocomplete? In the two weeks from 2024‐12‐22 to 2025‐01‐04 the market moved: GitHub Copilot Workspace (public preview, rolling since 2024‐12‐17) and Sourcegraph Cody 1.0 pushed agentic, repo‐scale edits and plan‐execute‐verify loops; Qualcomm, Apple, and mobile LLaMA work targeted sub‐10B on‐device latency; IBM, Quantinuum, and PsiQuantum updated roadmaps toward logical qubits (late‐December updates); DeepMind’s AlphaFold 3 tooling and OpenFold patched production workflows; Epic/Nuance DAX Copilot and Mayo Clinic posted deployments reducing documentation time; exchanges and FINRA updated AI surveillance work; LangSmith, Arize Phoenix and APM vendors expanded LLM observability; and hiring data flagged platform‐engineering demand. Why it matters: AI is being embedded into operations, so expect impacts on code review, test coverage, privacy architecture, auditability, and staffing. Immediate takeaway: prioritize observability, audit logs, on‐device‐first designs, and platform engineering around AI services.

AI Rewrites Engineering: From Autocomplete to Operating System

Published Jan 3, 2026

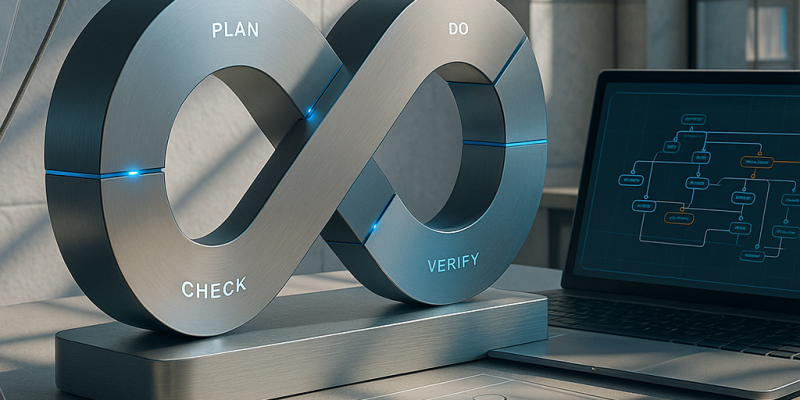

Engineers are reporting a productivity and governance breakthrough: in the last 14 days (posts dated 2026‐01‐02/03) practitioners described a repeatable blueprint that moves LLMs and agents from “autocomplete” to an engineering operating model. Its parts are PDCVR (Plan–Do–Check–Verify–Retrospect), folder‐level policies, meta‐agents, and execution workspaces like DevScribe. You get concrete wins: open‐sourced PDCVR prompts and Claude Code agents on GitHub (2026‐01‐03), Plan+TDD discipline, folder manifests that prevent architectural drift, and a meta‐agent that cuts a typical 1–2 day ticket from ~8 hours to ~2–3 hours. Teams also framed data backfills as governed workflows and named “alignment tax” as a coordination problem agents can monitor. If you care about velocity, risk, or compliance in fintech/trading/digital‐health, the immediate takeaway is clear: treat AI as an architectural question and adopt PDCVR, folder priors, executable docs, governed backfills, and alignment‐watching agents.

How AI Became the Governed Worker Powering Modern Engineering Workflows

Published Jan 3, 2026

Teams are turning AI from an oracle into a governed worker, cutting typical 1–2 day, ~8‐hour tickets to about 2–3 hours, by formalizing workflows and agent stacks. Over Jan 2–3, 2026, practitioners documented a Plan–Do–Check–Verify–Retrospect (PDCVR) loop in which LLMs produce stepwise plans, RED→GREEN tests, and self‐audits, while clustered Claude Code sub‐agents run builds and verification. Folder‐level manifests, plus a meta‐agent that rewrites short prompts into file‐specific instructions, reduce architecture‐breaking edits and speed throughput (≈20 minutes to craft the prompt, 2–3 short feedback loops, ~1 hour manual testing). DevScribe‐style workspaces let agents execute queries, run tests, and view schemas offline. The same patterns apply to data backfills and to lowering the measurable “alignment tax” by surfacing dependencies and missing reviewers. Bottom line: your advantage will come from designing the system that bounds and measures AI, not just picking a model.
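
The PDCVR loop can be sketched as a small driver around pluggable callables. This is a rough illustration under stated assumptions, not the published prompts or agent configs: `run_pdcvr`, `StepResult`, and the mapping of "Check" to a passing test and "Verify" to an independent callable are all hypothetical, and a real setup would feed the recorded lessons back into the next planning pass.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class StepResult:
    step: str
    red_seen: bool   # test failed before any code was written (RED)
    green: bool      # test passed after implementation (GREEN)
    verified: bool   # independent verification passed


@dataclass
class PdcvrRun:
    results: List[StepResult] = field(default_factory=list)
    lessons: List[str] = field(default_factory=list)


def run_pdcvr(
    goal: str,
    plan: Callable[[str], Iterable[str]],
    run_tests: Callable[[str], bool],
    implement: Callable[[str], None],
    verify: Callable[[str], bool],
    max_attempts: int = 3,
) -> PdcvrRun:
    run = PdcvrRun()
    for step in plan(goal):                      # Plan: small, ordered steps
        red_seen = not run_tests(step)           # RED: the test must fail first
        green = False
        for _ in range(max_attempts):
            implement(step)                      # Do: make one small change
            if run_tests(step):                  # Check: the test now passes
                green = True
                break
        verified = green and verify(step)        # Verify: independent build/review
        run.results.append(StepResult(step, red_seen, green, verified))
        if not (red_seen and verified):
            # Retrospect: record what went wrong and stop for human review.
            run.lessons.append(f"{step}: needs human review")
            break
    return run
```

Stopping at the first unverified step keeps diffs small and leaves a log of where the loop needed a human.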

Agentic AI Is Rewriting Software Operating Models

Published Jan 3, 2026

Ever lost hours to rework because an LLM dumped a giant, unreviewable PR? The article synthesizes Jan 2–3, 2026 practitioner threads into a concrete AI operating model you can use: a PDCVR (Plan–Do–Check–Verify–Retrospect) loop for Claude Code + GLM‐4.7 that enforces test‐driven steps, small diffs, agent‐based verification (Orchestrator, DevOps, Debugger, etc.), and logged retrospectives (GitHub prompts and sub‐agents published 2026‐01‐03). It pairs temporal discipline with spatial controls: folder‐level manifests plus a meta‐agent that expands short human intents into detailed prompts, cutting typical 1–2 day tasks from ~8 hours to ~2–3 hours (20 min meta‐prompt, 2–3 feedback loops, ~1 hr manual testing). Complementary pieces: DevScribe as an offline executable cockpit (DBs, APIs, diagrams), reusable data‐migration primitives for controlled backfills, and “coordination‐watching” agents to measure the alignment tax. Bottom line: these patterns form the first AI‐native operating model, and that is where competitive differentiation will emerge for fintech, trading, and regulated teams.
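
One way to picture the "spatial controls" is a lookup that gathers the rules of every folder containing a file and folds them into the expanded prompt. This is a minimal sketch with hypothetical names (`rules_for`, `expand_intent`) and an assumed manifest shape (folder path mapped to a list of rules); the published manifests and meta‐agent may be structured differently, and a real meta‐agent would use an LLM rather than string templating.

```python
from pathlib import PurePosixPath
from typing import Dict, List


def rules_for(path: str, manifests: Dict[str, List[str]]) -> List[str]:
    """Collect rules from every folder that contains `path`, outermost first."""
    parents = list(PurePosixPath(path).parents)[::-1]  # ".", "src", "src/api", ...
    rules: List[str] = []
    for folder in parents:
        rules.extend(manifests.get(str(folder), []))
    return rules


def expand_intent(intent: str, files: List[str], manifests: Dict[str, List[str]]) -> str:
    """Turn a short human intent into a prompt with per-file folder rules attached."""
    lines = [f"Task: {intent}"]
    for f in files:
        lines.append(f"File: {f}")
        for rule in rules_for(f, manifests):
            lines.append(f"  - {rule}")
    return "\n".join(lines)


# Example manifests; the rules themselves are made up for illustration.
EXAMPLE_MANIFESTS = {
    ".": ["Keep diffs small"],
    "src/api": ["No direct DB access"],
}
```

Calling `expand_intent("add endpoint", ["src/api/handlers.py"], EXAMPLE_MANIFESTS)` attaches both the repository‐wide rule and the `src/api` rule to that file, which is the mechanism that keeps a short request from producing an architecture‐breaking edit.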

Agentic AI Becomes Your Engineering Runtime: PDCVR, Agents, DevScribe

Published Jan 3, 2026

Worried your teams will waste weeks while competitors treat AI as a runtime, not a toy? In the last two weeks (threads dated Jan 2–3, 2026), engineering communities converged on a clear AI‐native operating model you can use now: a Plan–Do–Check–Verify–Retrospect (PDCVR) loop (used with Claude Code + GLM‐4.7) that turns LLMs into fast, reviewable junior devs; folder‐level instruction manifests plus a meta‐agent that rewrites short human prompts into thorough tasks (reducing a typical 1–2 day ticket from ~8 hours to ~2–3 hours); DevScribe‐style executable workspaces for local DB/API/diagram execution; explicit data‐migration/backfill platforms; and “alignment tax” agents that watch scope and dependencies. Why it matters: this shifts where you get advantage, from model choice to how you design and run the operating model, and these patterns are already becoming standard in fintech/trading and safety‐critical stacks.

How AI Becomes Infrastructure: PDCVR, Agent Hierarchies, and Executable Workspaces

Published Jan 3, 2026

Feeling like AI adds chaos, not speed? In the past 14 days engineers and researchers have pushed AI down the stack into infrastructure by building AI‐native operating models: PDCVR loops (Plan‐Do‐Check‐Verify‐Retrospect) using Claude Code with GLM‐4.7, folder‐level manifests, meta‐agents, and verification agents (Reddit/GitHub posts 2026‐01‐02–03). PDCVR enforces RED→GREEN TDD steps, offloads verification to .claude/agents, and feeds retrospectives back into planning. Folder priors plus a meta‐agent cut typical 1–2‐day tasks from ~8 hours to ~2–3 hours (~20 min initial prompt, 2–3 short feedback loops, ~1 hour testing). DevScribe workspaces (verified 2026‐01‐03) host DBs, diagrams, API testing and offline execution. Teams are also standardizing data backfills and measuring an “alignment tax” from scope creep. The takeaway: don’t chase the fastest model; design the most robust AI‐native operating model for your org.

How AI Became the Engineering Operating System: PDCVR, Agents, Workspaces

Published Jan 3, 2026

In the past 14 days engineers shifted from treating LLMs as sidecar chatbots to embedding them as an operating layer—here’s what you’ll get: a concrete, auditable AI‐native engineering model and clear operational wins. A senior engineer published a Plan–Do–Check–Verify–Retrospect (PDCVR) workflow for Claude Code + GLM‐4.7 on Reddit (2026‐01‐03) with open prompts and agent configs on GitHub, turning LLMs into repeatable TDD‐driven loops. Teams add folder‐level priors and a prompt‐rewriting meta‐agent to keep architecture intact; one report cut small‐change cycle time from ~8 hours to ~2–3 hours. DevScribe (2026‐01‐03) offers an offline, executable cockpit for DBs/APIs and diagrams. Practitioners also call for treating data backfills as platform features (2026‐01‐02) and using coordination agents to reduce the “alignment tax” (2026‐01‐02/03). The takeaway: the question isn’t which model, but how you design, instrument, and evolve the workflows where models and agents live.