The Shift to Domain‐Specific Foundation Models Every Tech Leader Must Know

Published Dec 6, 2025

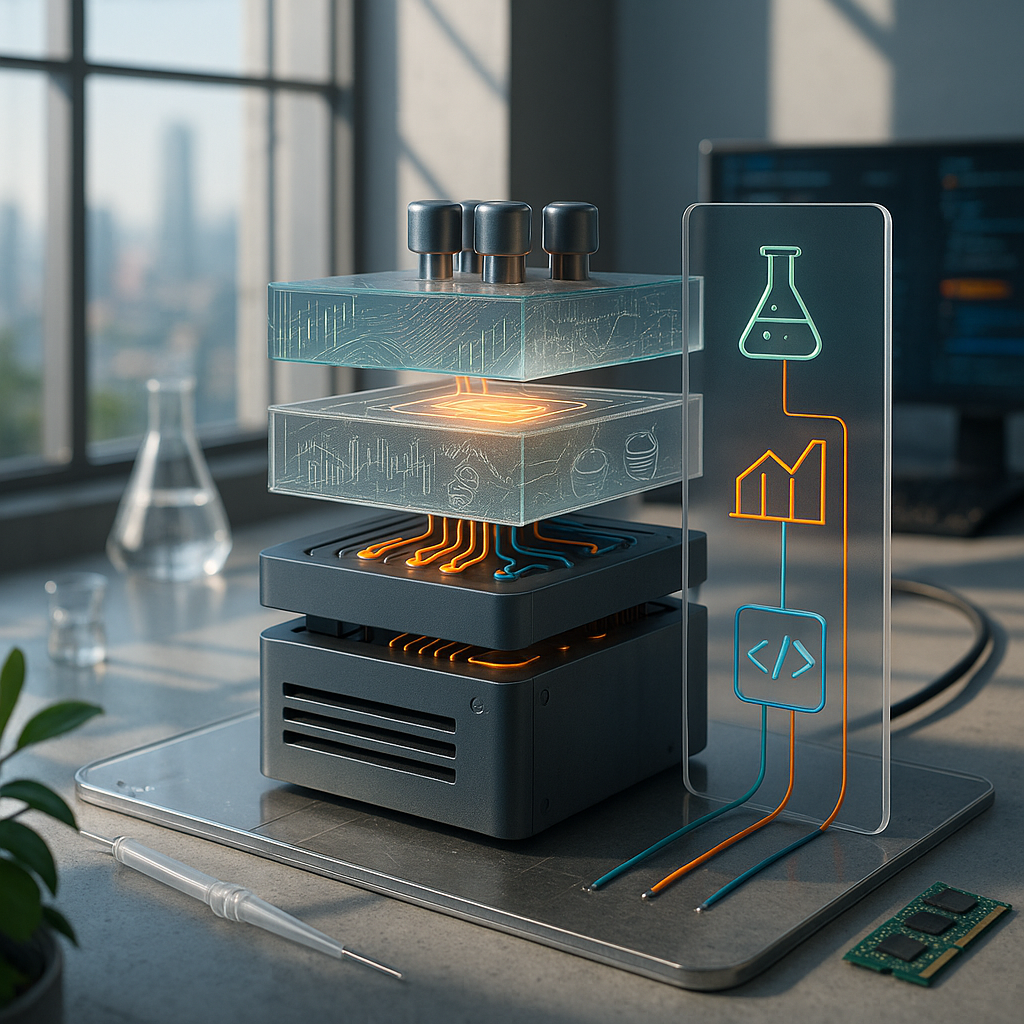

If your teams still bet on generic LLMs, you're facing diminishing returns: over the last two weeks the industry has accelerated toward enterprise‐grade, domain‐specific foundation models. Here is why this matters, what these stacks look like, and what to watch next.

Three forces drove the shift. Generic models stumble on niche terminology and protocol rules. High‐quality domain datasets have matured over the last 2–3 years. And tooling for safe adaptation (secure connectors, parameter‐efficient tuning such as LoRA/QLoRA, retrieval, and domain evals) is now enterprise‐ready.

In practice, these stacks layer four things: a base foundation model, domain pretraining or adaptation, retrieval and tools (backtests, lab instruments, CI), and guardrails.

The impact is better correctness, calibrated outputs, and tighter integration into trading, biotech, and engineering workflows. The trade-off is that you need to keep an eye on data bias, IP leakage, and regulatory guardrails.

Immediate signs to monitor: vendor domain‐tuning blueprints, open‐weight domain models, and platform tooling that treats adaptation and evaluation as first‐class concerns.
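To make the layering concrete, here is a minimal Python sketch of how the four layers described above (base model, domain adaptation, retrieval/tools, guardrails) might compose. Every name in it (`DomainStack`, `toy_retrieve`, `toy_generate`, `no_account_numbers`) is hypothetical, and the model and retriever are trivial stand-ins, not real components. It only illustrates the order in which the layers are called.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical sketch: every name here is illustrative, not a real library API.


@dataclass
class DomainStack:
    # Base model plus domain adaptation (e.g. a LoRA-tuned checkpoint), as one callable.
    generate: Callable[[str], str]
    # Retrieval/tools layer: returns context snippets for a query.
    retrieve: Callable[[str], List[str]]
    # Guardrails: each check returns True when the draft is acceptable.
    guardrails: List[Callable[[str], bool]]

    def answer(self, query: str) -> str:
        context = self.retrieve(query)            # 1. fetch domain context
        prompt = "\n".join(context + [query])     # 2. ground the prompt in it
        draft = self.generate(prompt)             # 3. call the adapted model
        if all(check(draft) for check in self.guardrails):
            return draft                          # 4. release only if every check passes
        return "[blocked by guardrail]"


def toy_retrieve(query: str) -> List[str]:
    """Stand-in retriever: keyword lookup over a tiny in-memory corpus."""
    corpus = {
        "settlement": "T+1 means a trade settles one business day after execution.",
        "assay": "An assay measures the presence or activity of a target substance.",
    }
    return [text for key, text in corpus.items() if key in query.lower()]


def toy_generate(prompt: str) -> str:
    """Stand-in model: returns the first line of the prompt."""
    return prompt.split("\n")[0]


def no_account_numbers(text: str) -> bool:
    """Example guardrail: reject output containing an all-digit token of 8+ characters."""
    return not any(tok.isdigit() and len(tok) >= 8 for tok in text.split())


stack = DomainStack(toy_generate, toy_retrieve, [no_account_numbers])
leaky = DomainStack(lambda prompt: "account 12345678", toy_retrieve, [no_account_numbers])

if __name__ == "__main__":
    print(stack.answer("What does T+1 settlement mean?"))
    print(leaky.answer("Which account was used?"))
```

The point of this design is that each layer is a plain callable, so a team could swap the retriever or add a guardrail without retraining the model. A production stack would replace the stubs with a real model endpoint, a real index, and domain-specific evals.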