Federal vs. State AI Regulation: The New Tech Governance Battleground

Published Nov 16, 2025

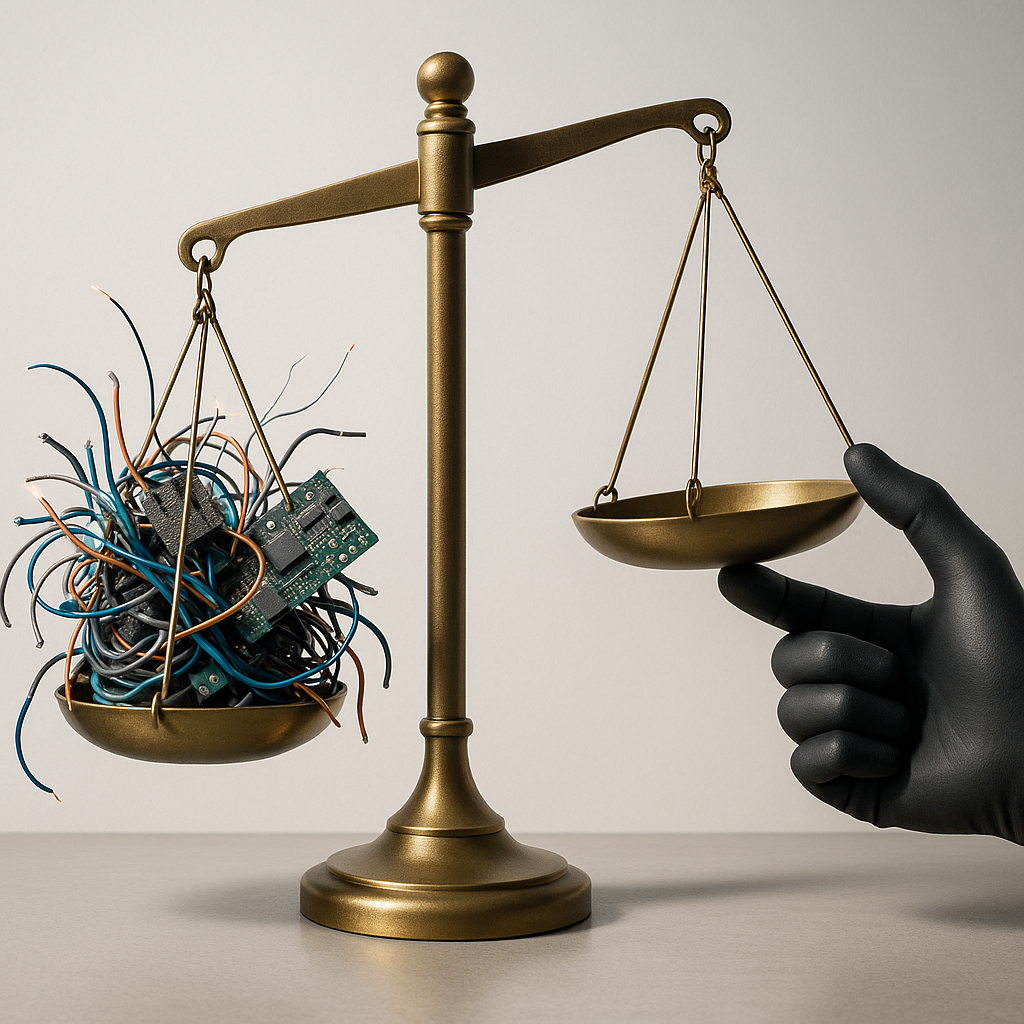

On July 1, 2025, the U.S. Senate voted 99–1 to strip a proposed 10-year moratorium on state AI regulation from a major tax and spending bill. The vote came after a revised version, framed as a limit on federal funding, also failed. The decision preserves states’ ability to pass and enforce AI-specific laws. It also prolongs regulatory uncertainty and keeps states functioning as policy “laboratories” (e.g., California’s SB-243 and state deepfake and impersonation laws).

The outcome matters for customers, revenue and operations: a patchwork of state rules will shape product requirements, compliance costs, liability exposure and market access across AI, software engineering, fintech, biotech and quantum applications. Immediate priorities: monitor federal bills and state law developments; track agency rulemaking (FTC, FCC) and standards work (NIST, ISO, IEEE); build compliance and auditability capabilities; design architectures flexible enough to accommodate differing state requirements; and engage with regulators and public comment processes.