AI Goes Backend: Agentic Workflows, On‐Device Models, Platform Pressure

Published Jan 4, 2026

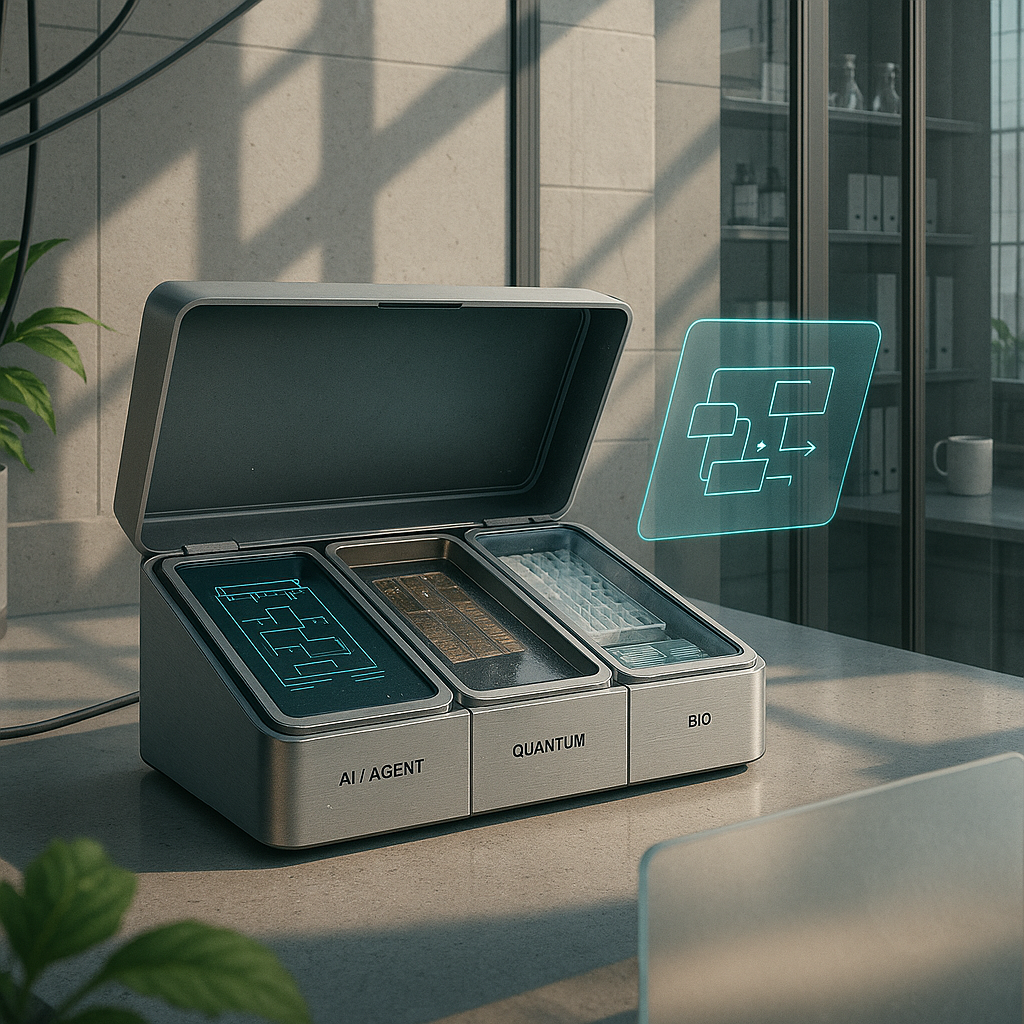

Two weeks of signals show the contest shifting from “bigger model wins” to “who wires the model into a reliable workflow.” Anthropic launched Claude 3.7 Sonnet on 2025‐12‐19 as a tool‐using backend for multi‐step program synthesis and API workflows; OpenAI’s o3‐mini (mid‐December) added controllable reasoning depth; and Google’s Gemini 2.0 Flash, together with on‐device families (Qwen2.5, Phi‐4, Apple tooling), pushes low‐latency and edge tiers. Quantum vendors (Quantinuum, QuEra, Pasqal) now report logical‐qubit and fidelity metrics, while Qiskit and Cirq focus on noise‐aware stacks. Biotech teams are wiring AI into automated labs and trials, and imaging, scribe, and EHR integrations are rolling out in Dec–Jan. For ops and product leaders, the takeaway is clear: invest in orchestration, observability, supply‐chain controls, and hybrid model routing, because that is where customer value and risk management live.