LLMs Are Rewriting Software Careers: From Coders to AI‐Orchestrators

Published Dec 6, 2025

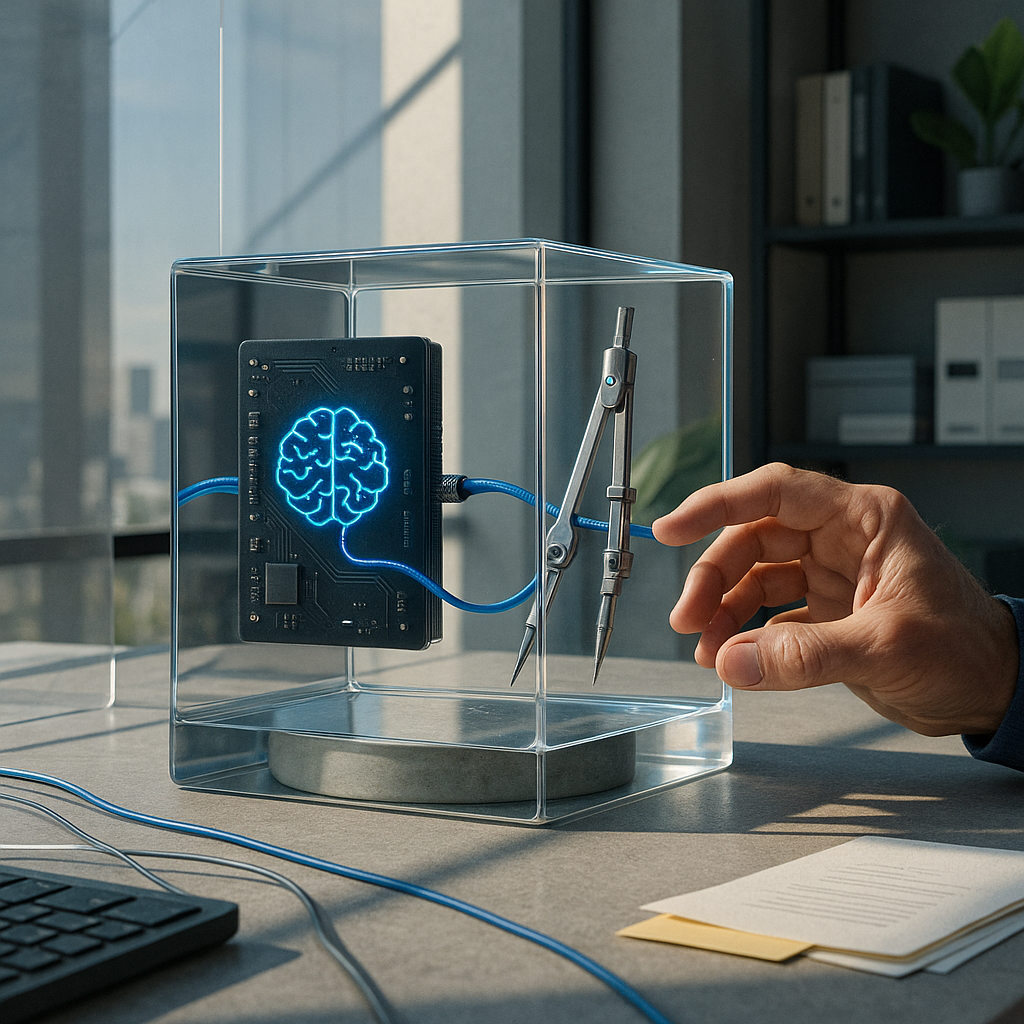

A widely read r/ExperiencedDevs post dated 2025-12-06, written by a senior engineer who uses Claude Opus 4.5, GPT-5.1 and Gemini 3 Pro in daily work, argues that modern LLMs already handle complex coding, large refactors, debugging and documentation in production-adjacent settings. Here is what you need to know.

This matters because routine CRUD work, migrations and test scaffolding are increasingly automatable. That implies fewer classic entry- and mid-level roles, pressure on hiring and cost structures, and higher value for people who combine deep domain knowledge, system architecture and AI orchestration. Humans still dominate domain modeling, non-functional tradeoffs and accountability.

Immediate actions: treat LLMs as core tools, reorient hiring and training toward domain and systems skills, have AI engineers build safe agentic workflows, and watch hiring patterns, job descriptions and headcount trends to confirm whether the shift is real.